Quiz

probability of A given B and C (relative to P ). That is,

Quiz

simply picking a reasonable size and shoehorning the training data into vectors of that size" now with large vectors or with multiple locations per feature in feature hashing?

FEATURE HASHING

SGD-based classifiers avoid the need to predetermine vector size by simply picking a reasonable size and shoehorning the training data into vectors of that size. This approach is known as feature hashing. The shoehorning is done by picking one or more vector locations using a hash of the variable name for continuous variables, or a hash of the variable name plus the category name or word for categorical, text-like, or word-like data.

This hashed-feature approach has the distinct advantage of requiring less memory and one fewer pass through the training data (no pass is needed to build a feature dictionary up front), but it can make it much harder to reverse engineer vectors to determine which original feature mapped to a vector location. This is because multiple features may hash to the same location. With large vectors or with multiple locations per feature, this isn't a problem for accuracy, but it can make it hard to understand what a classifier is doing.
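The reverse-engineering problem can be illustrated by listing, for each vector location, every feature that hashed into it. A minimal sketch, assuming an md5-based hash; `bucket` and `reverse_map` are hypothetical helper names:

```python
import hashlib
from collections import defaultdict

def bucket(key, size):
    # Stable md5-based hash reduced modulo the vector size.
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "little") % size

def reverse_map(feature_names, size):
    """For each vector location, list every feature that hashes into it."""
    owners = defaultdict(list)
    for name in feature_names:
        owners[bucket(name, size)].append(name)
    return dict(owners)

features = [f"word={i}" for i in range(100)]
small = reverse_map(features, size=10)
large = reverse_map(features, size=2**20)

# With 10 locations, some location must hold at least 10 of the 100 features
# (pigeonhole principle), so its weight cannot be traced to a single feature.
print(max(len(names) for names in small.values()))
print(max(len(names) for names in large.values()))
```

With 2**20 locations, collisions among 100 features become rare, though not impossible, which is why large vectors mitigate the problem rather than eliminate it.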

An additional benefit of feature hashing is that the unknown and unbounded vocabularies typical of word-like variables aren't a problem.
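To see why an unbounded vocabulary is not a problem, compare a dictionary-based encoder, whose vector grows with every new word, against a hashed one, whose length is fixed. A minimal sketch; `DictionaryCounter` and `HashedCounter` are hypothetical names, and md5 is an assumed choice of hash:

```python
import hashlib

class DictionaryCounter:
    """Word counts via an associative array: every new word gets a new index."""
    def __init__(self):
        self.index = {}

    def transform(self, words):
        for w in words:
            self.index.setdefault(w, len(self.index))
        vec = [0] * len(self.index)
        for w in words:
            vec[self.index[w]] += 1
        return vec

class HashedCounter:
    """Word counts via the hashing trick: the vector length never changes."""
    def __init__(self, size=16):
        self.size = size

    def transform(self, words):
        vec = [0] * self.size
        for w in words:
            h = int.from_bytes(hashlib.md5(w.encode("utf-8")).digest()[:8], "little")
            vec[h % self.size] += 1
        return vec

stream = [["cheap", "pills"], ["meeting", "at", "noon"], ["never", "seen", "before"]]
d, h = DictionaryCounter(), HashedCounter(size=16)
for doc in stream:
    print(len(d.transform(doc)), len(h.transform(doc)))
# prints: 2 16, then 5 16, then 8 16
```

Words never seen before still land somewhere in the fixed 16-slot vector, so no vocabulary has to be known or stored in advance.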

Quiz

kernel trick), is a fast and space-efficient way of vectorizing features (such as the words in a language), i.e., turning arbitrary features into indices in a vector or matrix. It works by applying a hash function to the features and using their hash values modulo the number of features as indices directly, rather than looking the indices up in an associative array. So what is the primary reason for using the hashing trick when building classifiers?

A linear classifier has one coefficient per feature, and these coefficients are held in memory during model building. The hashing trick collapses a large number of features into a much smaller number of vector locations, which reduces the number of coefficients and thus the memory requirements. Noisy features are not removed; they are combined with other features and so still have an impact.
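The memory argument can be made concrete with a small model whose coefficient array is sized by the number of hash buckets rather than the number of distinct features. A minimal sketch, assuming a logistic-loss linear model trained by plain SGD and an md5-based hash; `HashedLinearModel` and `bucket` are hypothetical names:

```python
import hashlib
import math

def bucket(feature, n_buckets):
    # Stable md5-based hash of the feature name, reduced modulo n_buckets.
    digest = hashlib.md5(feature.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "little") % n_buckets

class HashedLinearModel:
    """Logistic model whose coefficient array has n_buckets entries,
    no matter how many distinct features are seen."""

    def __init__(self, n_buckets=8):
        self.weights = [0.0] * n_buckets

    def score(self, features):
        return sum(self.weights[bucket(f, len(self.weights))] for f in features)

    def sgd_step(self, features, label, lr=0.1):
        # One SGD step on the logistic loss. Features that collide in a bucket
        # share, and jointly update, the same coefficient.
        p = 1.0 / (1.0 + math.exp(-self.score(features)))
        for f in features:
            self.weights[bucket(f, len(self.weights))] += lr * (label - p)

vocabulary = [f"token{i}" for i in range(1000)]
model = HashedLinearModel(n_buckets=8)
for start in range(0, len(vocabulary), 10):
    model.sgd_step(vocabulary[start:start + 10], label=1)
print(len(model.weights))  # 8 coefficients for 1000 distinct features
```

A noisy feature that collides with a useful one is not dropped: it reads and updates the shared coefficient, which is exactly the "combined with other features" effect described above.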

The validity of this approach depends heavily on the nature of the features and the problem domain; knowledge of the domain is important for judging whether it is applicable or will likely produce poor results. While hashing features may produce a smaller model, that model will be built from odd combinations of real-world features, and so will be harder to interpret.

Quiz

environments such as cell-phones.

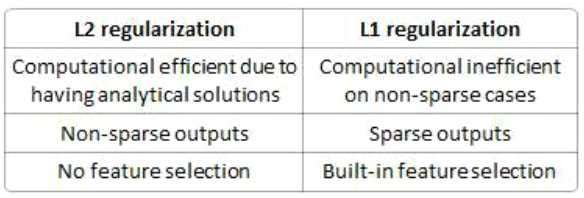

The two most common regularization methods are called L1 and L2 regularization. L1 regularization penalizes the weight vector for its L1-norm (i.e., the sum of the absolute values of the weights), whereas L2 regularization uses its L2-norm (in practice usually the squared L2-norm, i.e., the sum of the squared weights). There is usually no considerable difference between the two methods in terms of the accuracy of the resulting model (Gao et al. 2007), but L1 regularization has a significant practical advantage. Because many of the feature weights become exactly zero as a result of L1-regularized training, the model can be much smaller than one produced by L2 regularization. Compact models require less memory and storage space, and enable the application to start up quickly. These merits can be vitally important when the application is deployed in resource-constrained environments such as cell phones.
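The sparsity difference is easiest to see in the simplest setting, assuming an orthonormal design (XᵀX = I) and the objectives ½‖y − Xw‖² + λ‖w‖₁ (L1) versus ½‖y − Xw‖² + (λ/2)‖w‖² (L2). Under that assumption each coefficient has a closed form in terms of the unregularized least-squares coefficient b: L1 soft-thresholds it, L2 shrinks it proportionally. The coefficient values below are illustrative, not from any dataset:

```python
def l1_coefficients(ols, lam):
    """Soft-thresholding: minimizer of 0.5*(w - b)**2 + lam*|w| for each b."""
    out = []
    for b in ols:
        if b > lam:
            out.append(b - lam)
        elif b < -lam:
            out.append(b + lam)
        else:
            out.append(0.0)  # small coefficients are set exactly to zero
    return out

def l2_coefficients(ols, lam):
    """Uniform shrinkage: minimizer of 0.5*(w - b)**2 + 0.5*lam*w**2 for each b."""
    return [b / (1.0 + lam) for b in ols]

ols = [3.0, -0.4, 0.2, 1.5, -0.1]  # illustrative unregularized coefficients
l1 = l1_coefficients(ols, lam=0.5)
l2 = l2_coefficients(ols, lam=0.5)
print(l1)                         # [2.5, 0.0, 0.0, 1.0, 0.0]
print(sum(w == 0.0 for w in l2))  # 0: every L2 weight is shrunk but nonzero
```

Zero weights need not be stored at all, which is where the smaller L1 model comes from.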

Regularization works by adding a penalty based on the coefficient values to the error of the hypothesis. This way, an accurate hypothesis with unlikely (large) coefficients is penalized, while a somewhat less accurate but more conservative hypothesis with small coefficients is not penalized as much.
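The trade-off between fit and coefficient size can be sketched as a penalized error, here mean squared error plus λ times an L1 or L2 penalty. A minimal sketch; the two hypotheses are made-up numbers chosen to illustrate the comparison, and `penalized_error` is a hypothetical helper:

```python
def mse(y, y_hat):
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)

def penalized_error(y, y_hat, weights, lam, kind="l2"):
    """Data error plus lam times an L1 (sum of |w|) or L2 (sum of w**2) penalty."""
    if kind == "l1":
        penalty = sum(abs(w) for w in weights)
    else:
        penalty = sum(w * w for w in weights)
    return mse(y, y_hat) + lam * penalty

y = [1.0, 2.0]
# Two made-up hypotheses: A fits perfectly with large coefficients,
# B fits slightly worse with small ones.
a_pred, a_w = [1.0, 2.0], [10.0, -12.0]
b_pred, b_w = [1.5, 2.5], [1.0, -1.0]
for kind in ("l1", "l2"):
    print(kind, penalized_error(y, a_pred, a_w, 0.1, kind),
          penalized_error(y, b_pred, b_w, 0.1, kind))
```

With λ = 0.1, hypothesis A scores roughly 2.2 (L1) or 24.4 (L2), while B scores roughly 0.45 under either penalty, so the more conservative hypothesis is preferred despite its larger data error.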

Quiz

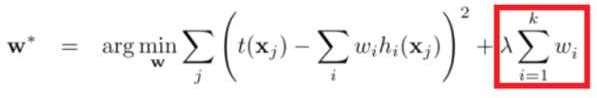

Mathematically speaking, it adds a regularization term in order to prevent the coefficients from fitting the training data so perfectly that the model overfits. The difference between L1 and L2 is...

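Regularization is a very important technique in machine learning for preventing overfitting. Mathematically speaking, it adds a regularization term in order to prevent the coefficients from fitting the training data so perfectly that the model overfits. The difference between L1 and L2 is just that L2 is the sum of the squares of the weights, while L1 is the sum of the absolute values of the weights. For example, L1 regularization on least squares minimizes

$$\sum_{i=1}^{n} \left( y_i - \sum_{j=1}^{p} x_{ij} w_j \right)^2 + \lambda \sum_{j=1}^{p} \lvert w_j \rvert$$

where λ ≥ 0 controls the strength of the penalty.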

Quiz

Explanation:

The difference between their properties can be briefly summarized as follows:

Quiz

Optimizing with an L1 regularization term is harder than with an L2 regularization term because

Much of optimization theory has historically focused on convex loss functions because they're much easier to optimize than non-convex functions: a continuous convex function over a closed, bounded domain is guaranteed to have a minimum, any local minimum it has is also a global minimum, and so it's easy to find that minimum by following the gradient downhill no matter where you start. For non-convex functions, on the other hand, where you start matters a great deal; if you start in a bad position and follow the gradient, you're likely to end up in a local minimum that is not necessarily equal to the global minimum.

You can think of convex functions as cereal bowls: anywhere you start in the cereal bowl, you're likely to roll down to the bottom. A non-convex function is more like a skate park: lots of ramps, dips, ups and downs. It's a lot harder to find the lowest point in a skate park than it is in a cereal bowl.
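The cereal-bowl and skate-park picture can be reproduced with plain gradient descent on two one-dimensional functions: the convex f(x) = x² and the non-convex f(x) = x⁴ − 3x² + x, which has a global minimum near −1.30 and a shallower local minimum near 1.13. The functions, learning rate, and step count are illustrative choices for this sketch:

```python
def gradient_descent(grad, start, lr=0.01, steps=1000):
    """Plain gradient descent: repeatedly step against the gradient."""
    x = start
    for _ in range(steps):
        x -= lr * grad(x)
    return x

def bowl(x):        # convex "cereal bowl": f(x) = x**2, single minimum at 0
    return x ** 2

def bowl_grad(x):
    return 2 * x

def skate(x):       # non-convex "skate park": f(x) = x**4 - 3*x**2 + x
    return x ** 4 - 3 * x ** 2 + x

def skate_grad(x):
    return 4 * x ** 3 - 6 * x + 1

for s in (-5.0, -1.0, 2.0, 5.0):
    print(s, round(gradient_descent(bowl_grad, s), 6))  # all end near 0

left = gradient_descent(skate_grad, -2.0)   # reaches the global minimum near -1.30
right = gradient_descent(skate_grad, 2.0)   # gets stuck in the local minimum near 1.13
print(round(left, 2), round(skate(left), 2))
print(round(right, 2), round(skate(right), 2))
```

Every start in the bowl reaches the same point, while the skate-park result depends on the starting position, and the run started at 2.0 ends at a higher value than the global minimum.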

Quiz

makes use of several variables that may be...

Logistic regression is a model used to predict the probability that an event occurs. It makes use of several predictor variables that may be either numerical or categorical.
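As a sketch of how numerical and categorical predictors enter the same model, the snippet below one-hot encodes a categorical predictor and passes the linear combination through the logistic (sigmoid) function. The predictor names `amount` and `channel`, their levels, and all coefficients are hypothetical and made up rather than fitted:

```python
import math

CHANNELS = ["email", "web", "phone"]  # hypothetical categorical predictor levels

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def one_hot(value, levels):
    # Encode a categorical value as 0/1 indicators, one per level.
    return [1.0 if value == level else 0.0 for level in levels]

def predict_proba(amount, channel, intercept, coef_amount, coef_channel):
    """P(event) = sigmoid(intercept + numeric term + categorical terms)."""
    z = intercept + coef_amount * amount
    z += sum(c * x for c, x in zip(coef_channel, one_hot(channel, CHANNELS)))
    return sigmoid(z)

# Made-up coefficients; "email" acts as the reference level (coefficient 0).
params = dict(intercept=-2.0, coef_amount=0.25, coef_channel=[0.0, 0.5, -0.5])
print(predict_proba(6.0, "web", **params))    # z = -2.0 + 1.5 + 0.5 = 0, so 0.5
print(predict_proba(6.0, "phone", **params))  # z = -1.0, below 0.5
```

Giving the reference level a zero coefficient mirrors the common practice of dropping one indicator when the model also has an intercept.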

Quiz

Clustering is an example of unsupervised learning. The clustering algorithm finds groups within the data without being told what to look for upfront. This contrasts with classification, an example of supervised machine learning, which is the process of determining to which class an observation belongs. A common application of classification is spam filtering. With spam filtering we use labeled data to train the classifier: e-mails marked as spam or ham.
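The contrast can be sketched with two tiny one-dimensional examples: k-means, which groups unlabeled points on its own, and a nearest-centroid classifier (a deliberately simple stand-in, not what production spam filters necessarily use), which learns from points labeled spam or ham. The feature "number of suspicious words per e-mail" and all data values are hypothetical:

```python
def kmeans_1d(points, centers, iters=10):
    """Unsupervised: group unlabeled points around k centers (k-means)."""
    for _ in range(iters):
        groups = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            groups[nearest].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

def train_nearest_centroid(xs, labels):
    """Supervised: learn one mean per label from labeled examples."""
    sums, counts = {}, {}
    for x, y in zip(xs, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def classify(x, centroids):
    # Assign the label whose centroid is closest.
    return min(centroids, key=lambda y: abs(x - centroids[y]))

# Clustering: no labels, the algorithm finds the two groups by itself.
_, groups = kmeans_1d([1.0, 1.2, 0.8, 9.0, 9.5, 8.5], centers=[0.0, 5.0])
print(groups)  # [[1.0, 1.2, 0.8], [9.0, 9.5, 8.5]]

# Classification: hypothetical feature = number of suspicious words per e-mail.
centroids = train_nearest_centroid([0, 1, 1, 7, 8, 9], ["ham"] * 3 + ["spam"] * 3)
print(classify(6, centroids), classify(0, centroids))  # spam ham
```

The clustering step returns unnamed groups, whereas the classifier can only name "spam" or "ham" because it was trained on examples carrying those labels.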

Info quiz:

- Quiz name: Databricks Certified Professional Data Scientist

- Total number of questions: 137

- Number of questions for the test: 50

- Pass score: 80%
