Quiz

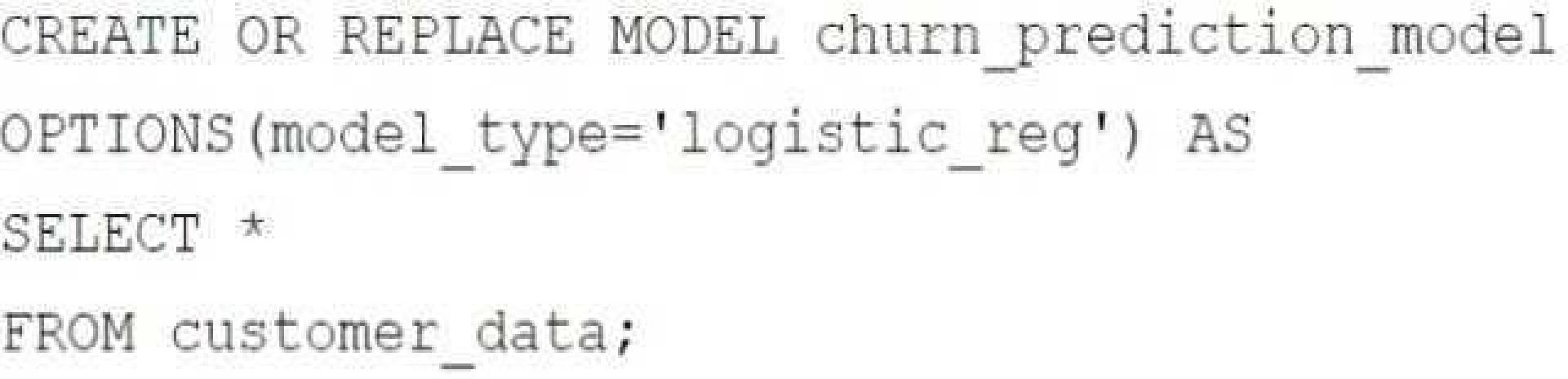

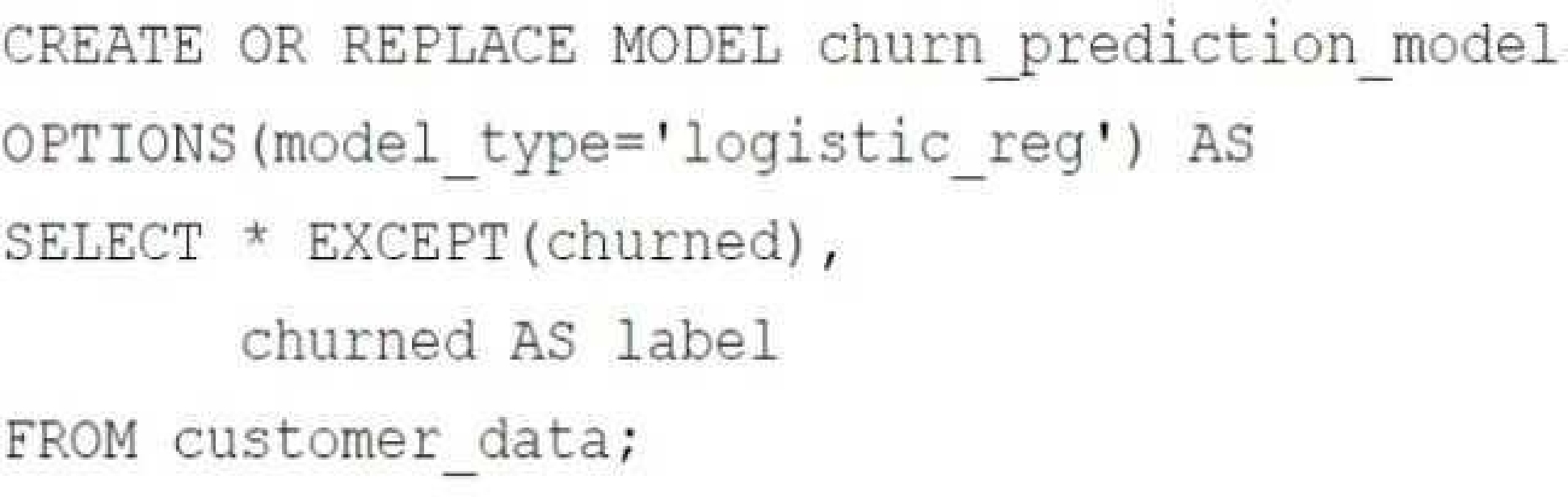

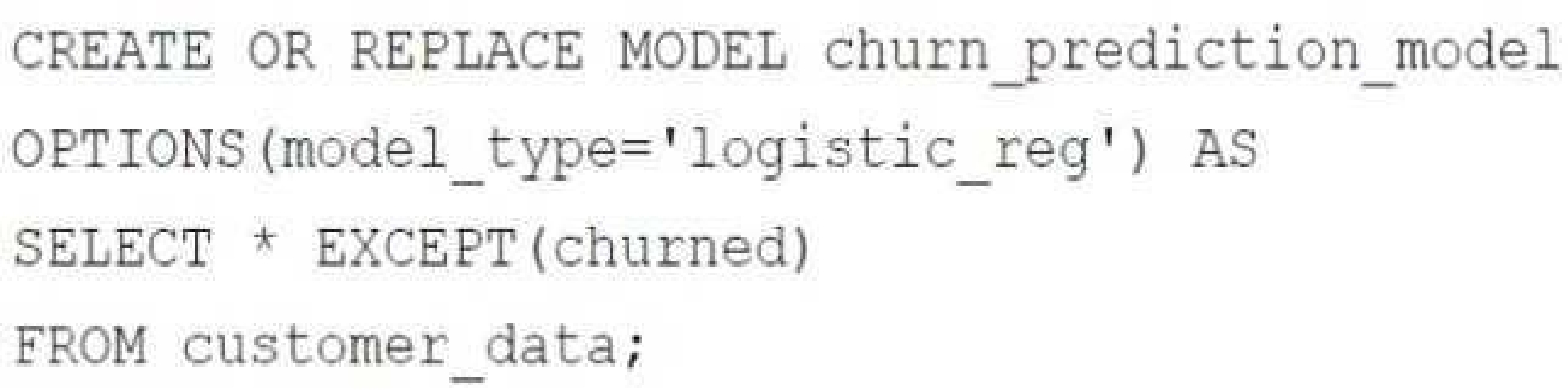

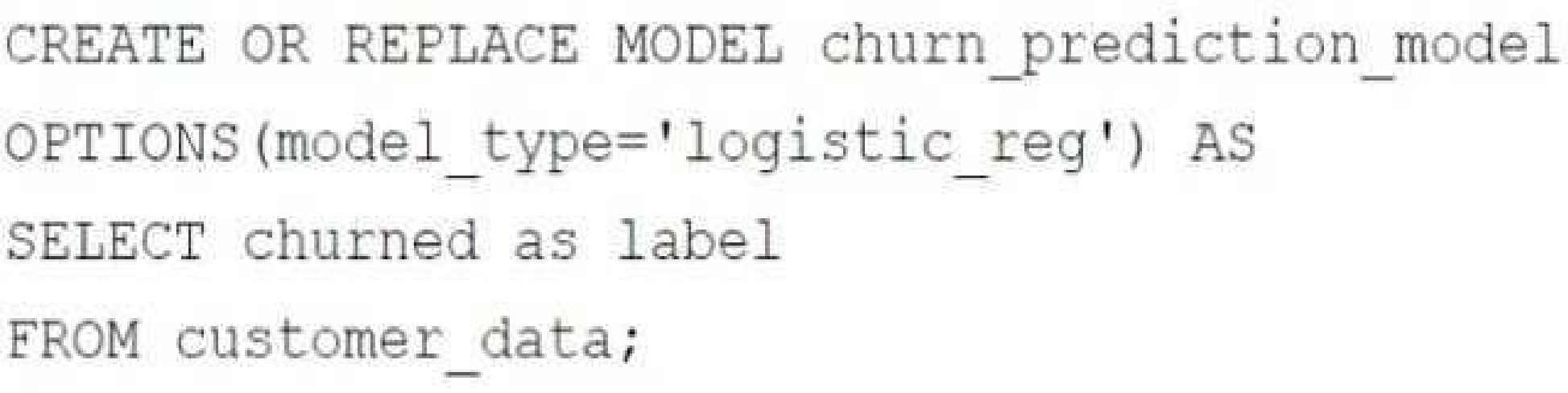

BigQuery. The dataset includes customer demographics, purchase history, and a label indicating whether the customer churned or not. You want to build a machine learning model to identify customers at risk of churning. You need to create and train a logistic regression model for predicting customer churn, using the customer_data table with the churned column as the target label. Which BigQuery ML query should you use?

A)

B)

C)

D)
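The answer options for this question did not survive as text, so the following is only a minimal sketch of the general shape of a BigQuery ML statement that trains a logistic regression model. It is not a reproduction of any listed option. The dataset name `my_dataset`, the model name `churn_model`, and the helper `build_churn_model_sql` are assumptions made for illustration; only the `customer_data` table and the `churned` label column come from the question.

```python
def build_churn_model_sql(dataset: str, model_name: str = "churn_model") -> str:
    """Return a BigQuery ML CREATE MODEL statement for a churn classifier.

    `dataset` and `model_name` are hypothetical placeholders; the table
    `customer_data` and the label column `churned` come from the question.
    """
    return (
        f"CREATE OR REPLACE MODEL `{dataset}.{model_name}`\n"
        "OPTIONS (\n"
        "  model_type = 'logistic_reg',\n"      # logistic regression
        "  input_label_cols = ['churned']\n"    # target label column
        ") AS\n"
        "SELECT *\n"
        f"FROM `{dataset}.customer_data`"
    )


# Print the statement; it would still need to be run in BigQuery.
print(build_churn_model_sql("my_dataset"))
```

The statement combines `CREATE MODEL` with a `SELECT` that supplies the training rows, so model creation and training happen in one query.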

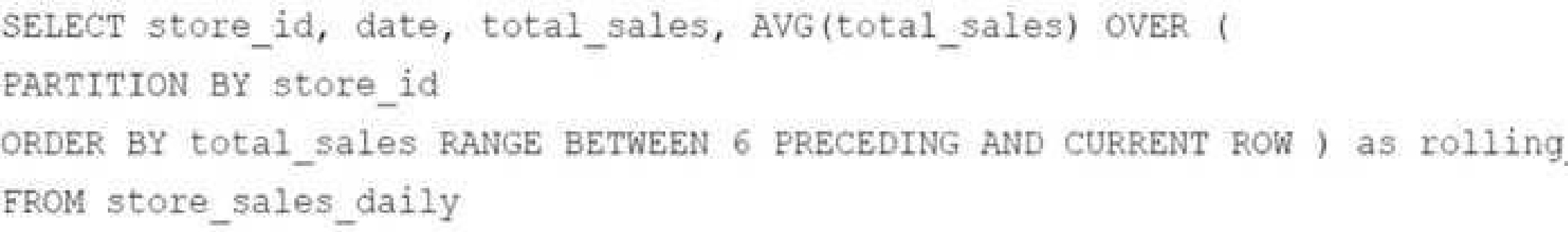

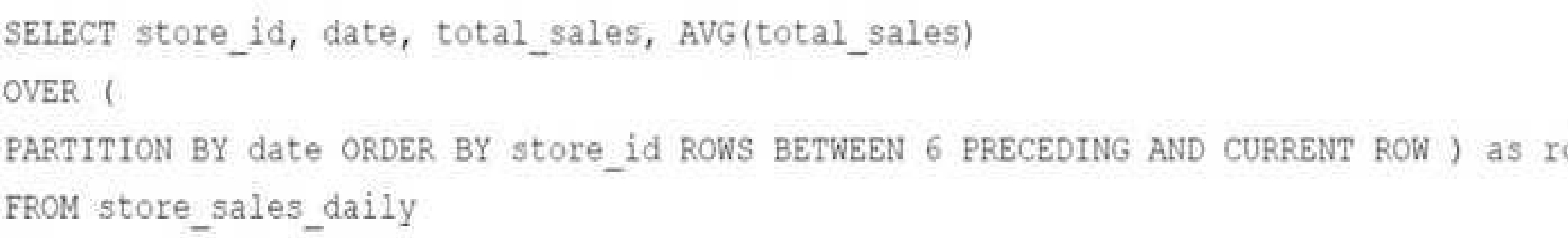

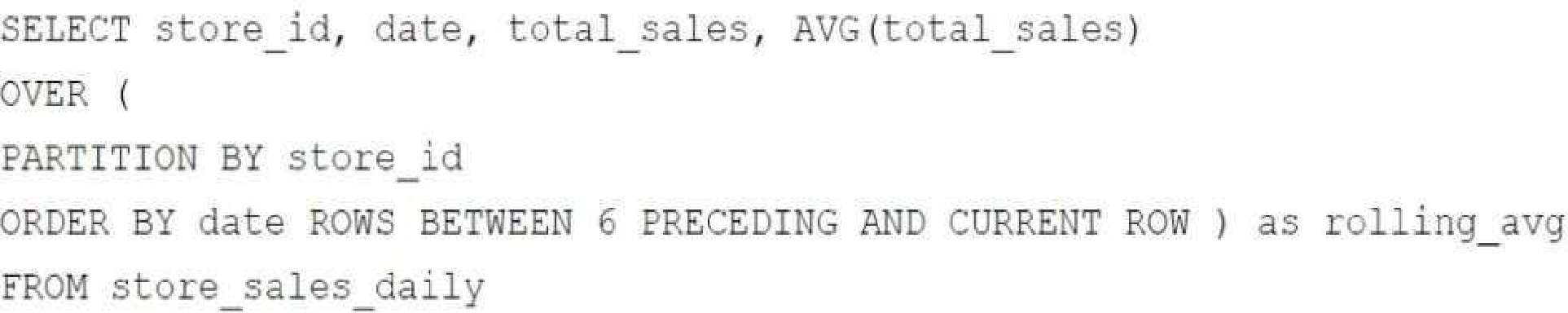

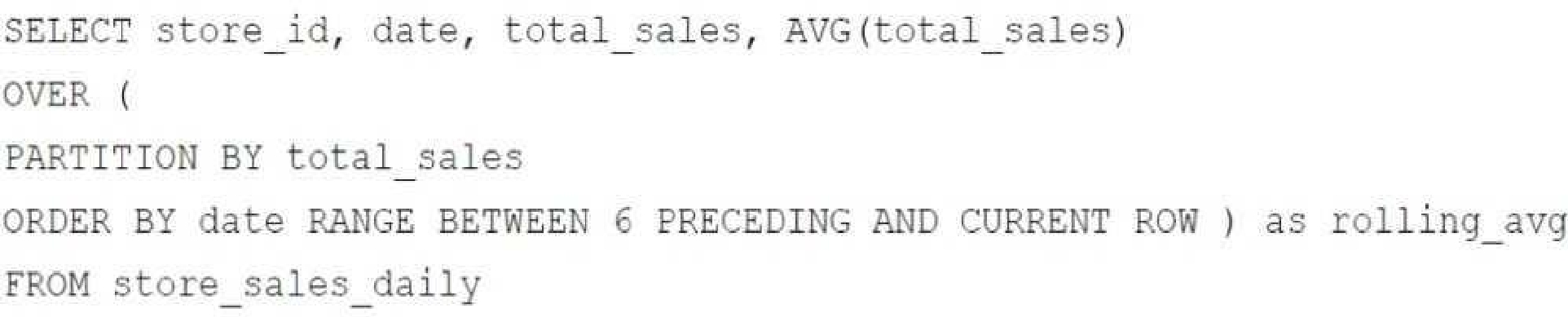

Quiz

each location each day. You want to use SQL to calculate the weekly moving average of sales by location to identify trends for each store. Which query should you use?

A)

B)

C)

D)
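The options for this question are also missing, so here is a hedged sketch of one common way to express a seven-day moving average per location with a SQL window function. It runs against an in-memory SQLite database purely so that the query can be executed locally; the table name `daily_sales` and the columns `location`, `sale_date`, and `sales` are assumptions, since the question's own schema was cut off. Window functions need SQLite 3.25 or newer.

```python
import sqlite3

# In-memory database with a hypothetical daily_sales table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE daily_sales (location TEXT, sale_date TEXT, sales REAL)"
)

# Eight days of made-up sales for two stores.
rows = [("store_a", f"2024-01-{d:02d}", float(d * 10)) for d in range(1, 9)]
rows += [("store_b", f"2024-01-{d:02d}", 5.0) for d in range(1, 9)]
conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", rows)

# Average over the current day and the six preceding days, per location.
MOVING_AVG_SQL = """
SELECT location,
       sale_date,
       AVG(sales) OVER (
           PARTITION BY location
           ORDER BY sale_date
           ROWS BETWEEN 6 PRECEDING AND CURRENT ROW
       ) AS weekly_moving_avg
FROM daily_sales
ORDER BY location, sale_date
"""

result = conn.execute(MOVING_AVG_SQL).fetchall()
for row in result:
    print(row)
```

`PARTITION BY location` keeps each store's average separate, and the `ROWS BETWEEN 6 PRECEDING AND CURRENT ROW` frame limits each average to at most seven daily rows. This assumes one row per location per day; with gaps in the dates, a row-based frame would span more than a calendar week.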

Quiz

small appliances. You need to process messages arriving at a Pub/Sub topic, capitalize letters in the serial number field, and write results to BigQuery. You want to use a managed service and write a minimal amount of code for underlying transformations. What should you do?

Quiz

downstream reporting. You need to quickly build a scalable data pipeline that transforms the data while providing insights into data quality issues. What should you do?

Quiz

temporary files are only needed for seven days. To reduce storage costs and keep your bucket organized, you want to automatically delete these files once they are older than seven days. What should you do?
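For readers unfamiliar with how age-based deletion is usually configured in Cloud Storage, the sketch below builds a lifecycle configuration with a single `Delete` rule conditioned on object age. It is an illustration under assumptions, not a statement of which answer option the quiz expects; the helper name `delete_after_days_rule` is hypothetical.

```python
import json


def delete_after_days_rule(age_days: int) -> dict:
    """Build a Cloud Storage lifecycle configuration with one rule that
    deletes objects once they are older than `age_days` days."""
    return {
        "rule": [
            {
                "action": {"type": "Delete"},
                "condition": {"age": age_days},
            }
        ]
    }


# Serialize the seven-day rule so it could be saved to a file.
lifecycle_json = json.dumps(delete_after_days_rule(7), indent=2)
print(lifecycle_json)
```

Saved to a file, a configuration like this could be applied with `gcloud storage buckets update gs://BUCKET_NAME --lifecycle-file=FILE`, where the bucket and file names are placeholders.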

Quiz

records with personally identifiable information (PII) such as names, addresses, and medical diagnoses. You need a standardized managed solution that de-identifies PII across all your data feeds prior to ingestion to Google Cloud. What should you do?

Quiz

backups. Your organization is subject to strict compliance regulations that mandate data immutability for specific data types. You want to use an efficient process to reduce storage costs while ensuring that your storage strategy meets retention requirements. What should you do?

Quiz

history, demographics, and website interactions. You need to build a machine learning (ML) model to predict which customers are most likely to make a purchase in the next month. You have limited engineering resources and need to minimize the ML expertise required for the solution. What should you do?

Quiz

Data processing is performed in stages, where the output of one stage becomes the input of the next. Each stage takes a long time to run. Occasionally a stage fails, and you have to address the problem. You need to ensure that the final output is generated as quickly as possible. What should you do?

Quiz

dataset with the team while minimizing the risk of unauthorized copying of data. You also want to create a reusable framework in case you need to share this data with other teams in the future. What should you do?

Google Cloud Certified - Associate Data Practitioner Practice test unlocks all online simulator questions

Thank you for choosing the free version of the Google Cloud Certified - Associate Data Practitioner practice test! To deepen your knowledge further, unlock the full version of our Google Cloud Certified - Associate Data Practitioner Simulator: you will be able to take tests drawn from over 72 constantly updated questions. 98% of people pass the exam on the first attempt after preparing with our 72 questions.

What to expect from our Google Cloud Certified - Associate Data Practitioner practice tests and how to prepare for any exam?

The Google Cloud Certified - Associate Data Practitioner Simulator Practice Tests are part of the Google Database and are designed to help you prepare for the Google Cloud Certified - Associate Data Practitioner exam. The practice tests consist of 72 questions written by experts to prepare you to pass the exam on the first attempt. The Google Cloud Certified - Associate Data Practitioner database includes questions from previous and other exams, so you can practice with questions similar to those that have appeared before. Preparing with the Simulator will also give you an idea of the time it takes to complete each section of the practice test. Note that the Simulator does not replace the classic Google Cloud Certified - Associate Data Practitioner study guides; however, it provides valuable insights into what to expect and how much work is needed to prepare for the exam.

The Google Cloud Certified - Associate Data Practitioner practice test is therefore an excellent tool for preparing for the actual exam, together with our Google practice test. Our Google Cloud Certified - Associate Data Practitioner Simulator will help you assess your level of preparation and understand your strengths and weaknesses. Below you can read all the quizzes you will find in our Google Cloud Certified - Associate Data Practitioner Simulator and see how our Google Cloud Certified - Associate Data Practitioner Database is made up of real questions:

Info quiz:

- Quiz name: Google Cloud Certified - Associate Data Practitioner

- Total number of questions: 72

- Number of questions for the test: 50

- Pass score: 80%

You can prepare for the Google Cloud Certified - Associate Data Practitioner exams with our mobile app. It is very easy to use and even works offline in case of network failure, with all the functions you need to study and practice with our Google Cloud Certified - Associate Data Practitioner Simulator.

Use our Mobile App, available for both Android and iOS devices, with our Google Cloud Certified - Associate Data Practitioner Simulator. You can use it anywhere, and the app is free and available on all stores.

Our Mobile App contains all Google Cloud Certified - Associate Data Practitioner practice tests, which consist of 72 questions, and also provides study material to help you pass the final Google Cloud Certified - Associate Data Practitioner exam. Our Google Cloud Certified - Associate Data Practitioner database contains hundreds of questions and Google Tests related to the Google Cloud Certified - Associate Data Practitioner exam. This way you can practice anywhere you want, even offline.
