Quiz

Overview

You are a data scientist in a company that provides data science for professional sporting events.

Models will use global and local market data to meet the following business goals:

• Understand sentiment of mobile device users at sporting events based on audio from crowd reactions.

• Assess a user's tendency to respond to an advertisement.

• Customize styles of ads served on mobile devices.

• Use video to detect penalty events.

Current environment

Requirements

• Media used for penalty event detection will be provided by consumer devices. Media may include images and videos captured during the sporting event and shared using social media. The images and videos will have varying sizes and formats.

• The data available for model building comprises seven years of sporting event media. The sporting event media includes recorded videos, transcripts of radio commentary, and logs from related social media feeds captured during the sporting events.

• Crowd sentiment will include audio recordings submitted by event attendees in both mono and stereo formats.

Advertisements

• Ad response models must be trained at the beginning of each event and applied during the sporting event.

• Market segmentation models must optimize for similar ad response history.

• Sampling must guarantee mutual and collective exclusivity between local and global segmentation models that share the same features.

• Local market segmentation models will be applied before determining a user’s propensity to respond to an advertisement.

• Data scientists must be able to detect model degradation and decay.

• Ad response models must support non-linear boundaries of features.

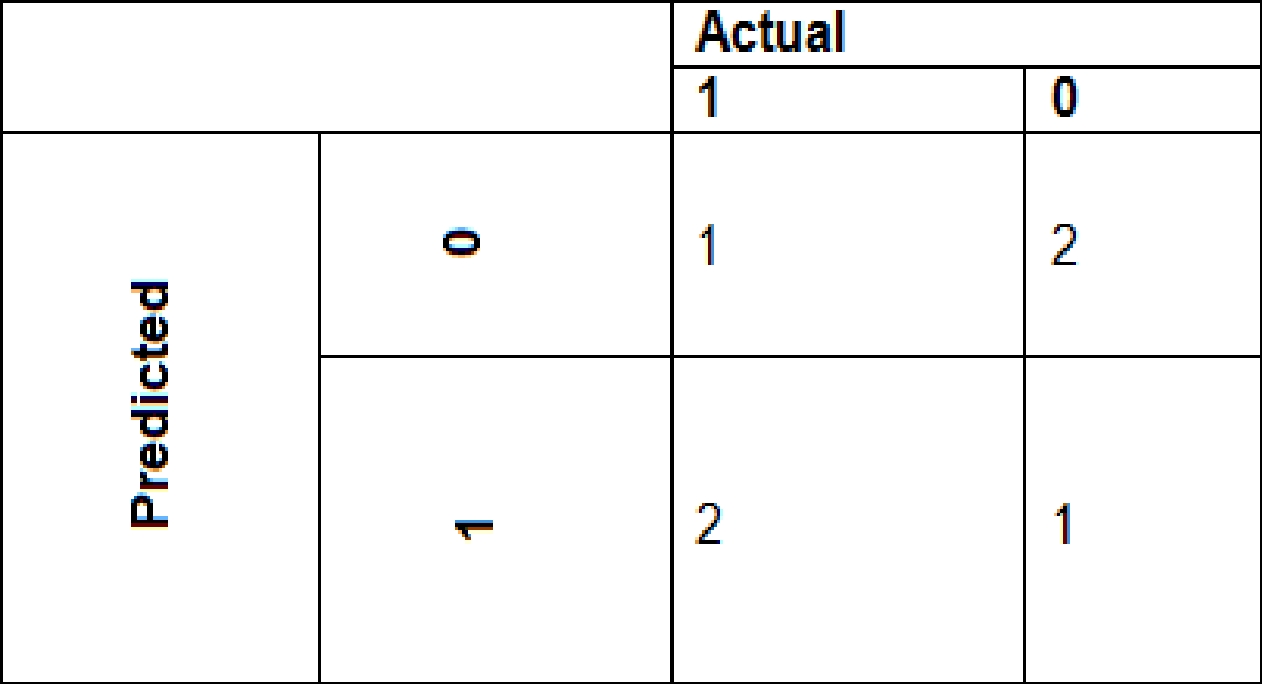

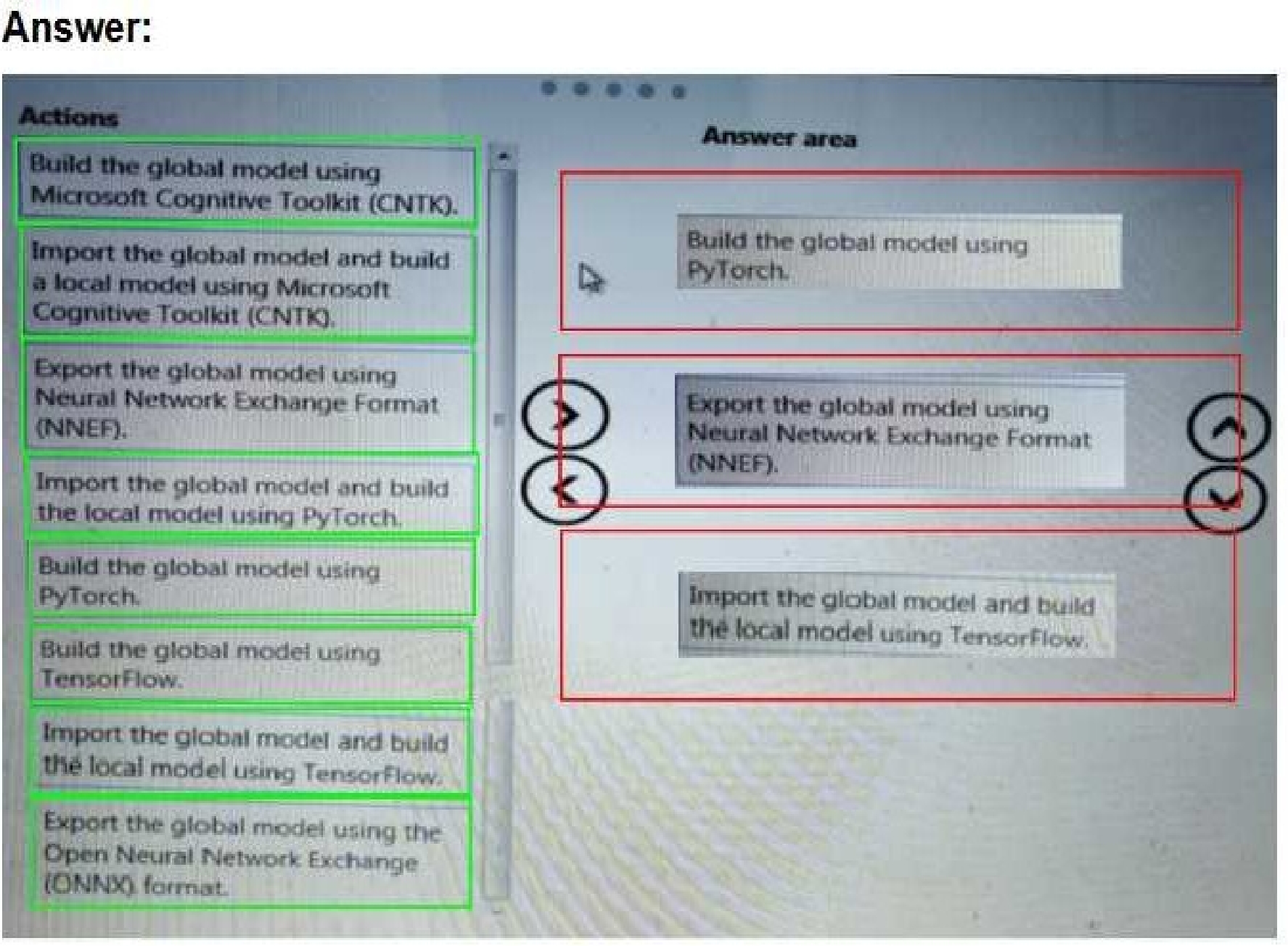

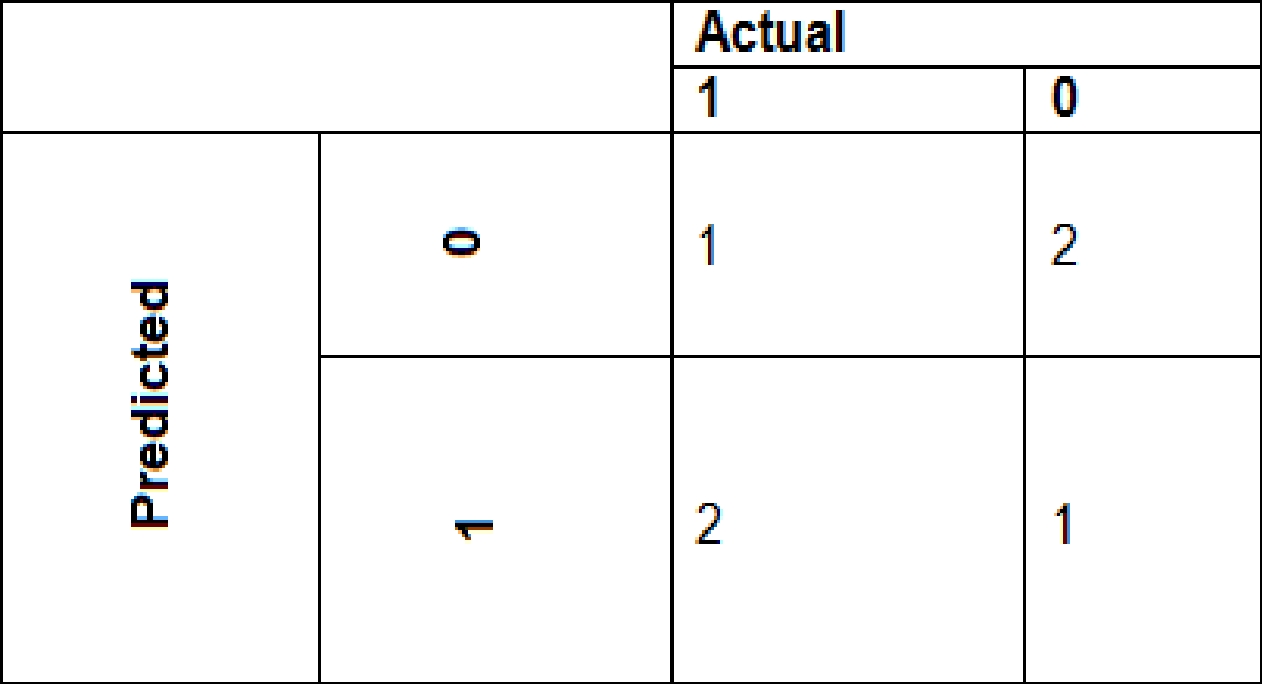

• The ad propensity model uses a cut threshold of 0.45, and retraining occurs if weighted Kappa deviates from 0.1 +/- 5%.
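The cut threshold and retraining rule can be illustrated with a small sketch. It assumes integer class labels, linear disagreement weights, a score at or above 0.45 counting as a positive response, and "+/- 5%" meaning a relative tolerance around 0.1. None of these details are stated in the case study, and the function names `apply_cut`, `weighted_kappa`, and `needs_retrain` are hypothetical.

```python
from collections import Counter


def apply_cut(scores, threshold=0.45):
    """Turn propensity scores into 0/1 labels (assumption: >= threshold is positive)."""
    return [1 if s >= threshold else 0 for s in scores]


def weighted_kappa(y_true, y_pred, n_classes):
    """Linearly weighted Cohen's kappa for labels 0..n_classes-1 (n_classes >= 2)."""
    n = len(y_true)
    observed = Counter(zip(y_true, y_pred))
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    observed_disagreement = 0.0
    expected_disagreement = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = abs(i - j) / (n_classes - 1)
            observed_disagreement += weight * observed[(i, j)] / n
            expected_disagreement += weight * (true_counts[i] / n) * (pred_counts[j] / n)
    if expected_disagreement == 0.0:
        # Kappa is undefined here; returning 0.0 is an assumption of this sketch.
        return 0.0
    return 1.0 - observed_disagreement / expected_disagreement


def needs_retrain(kappa, target=0.1, tolerance=0.05):
    """Flag a retrain when kappa leaves the band target +/- 5% (relative, by assumption)."""
    return abs(kappa - target) > target * tolerance
```

Under these assumptions the acceptable band is roughly 0.095 to 0.105, so a monitoring job would call `needs_retrain` on the kappa computed from each new batch of labelled responses.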

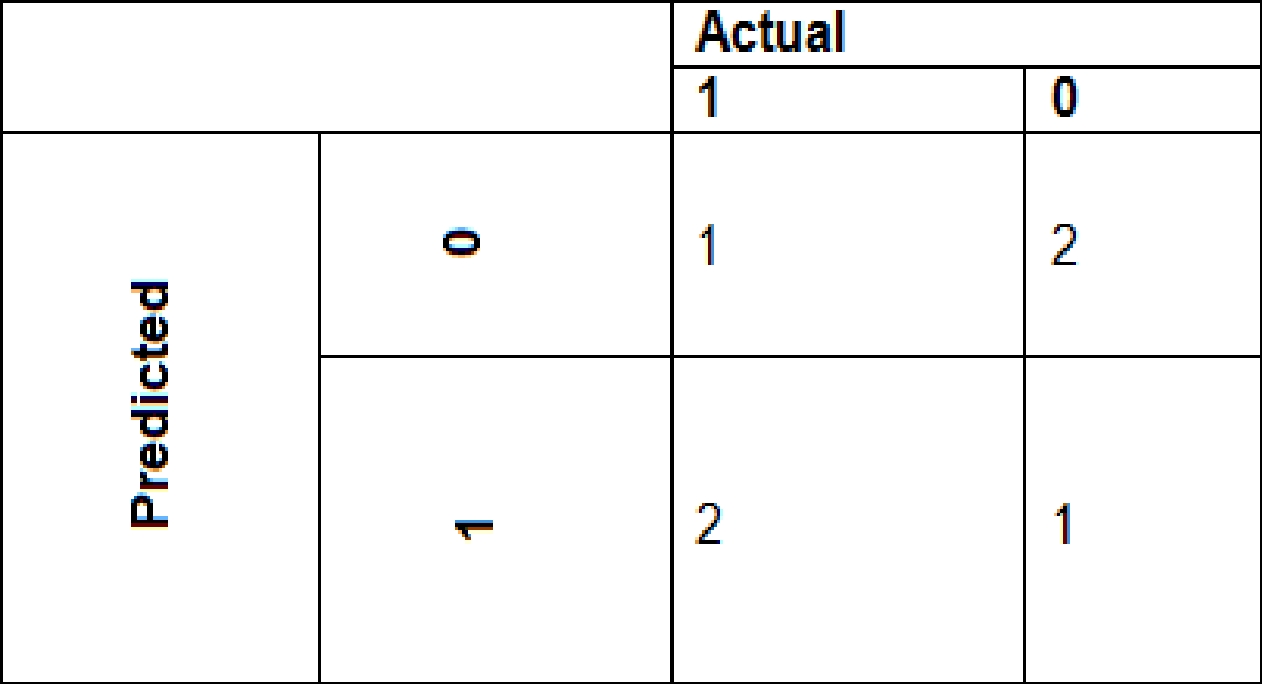

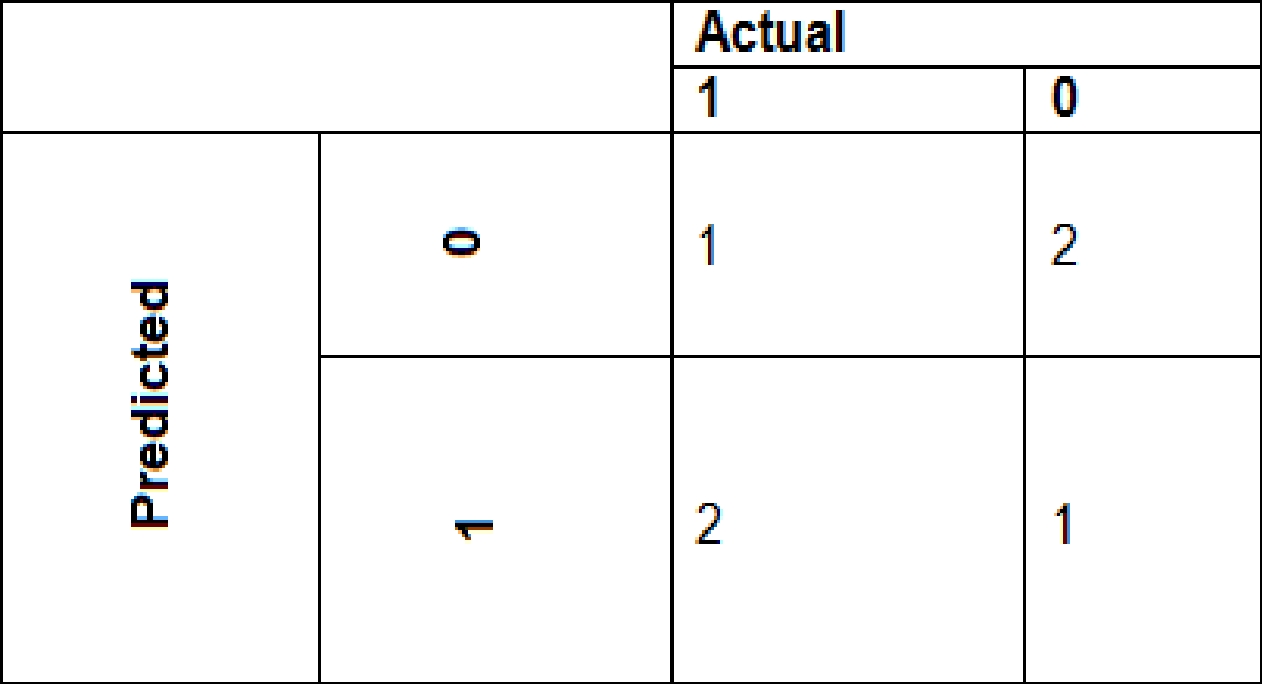

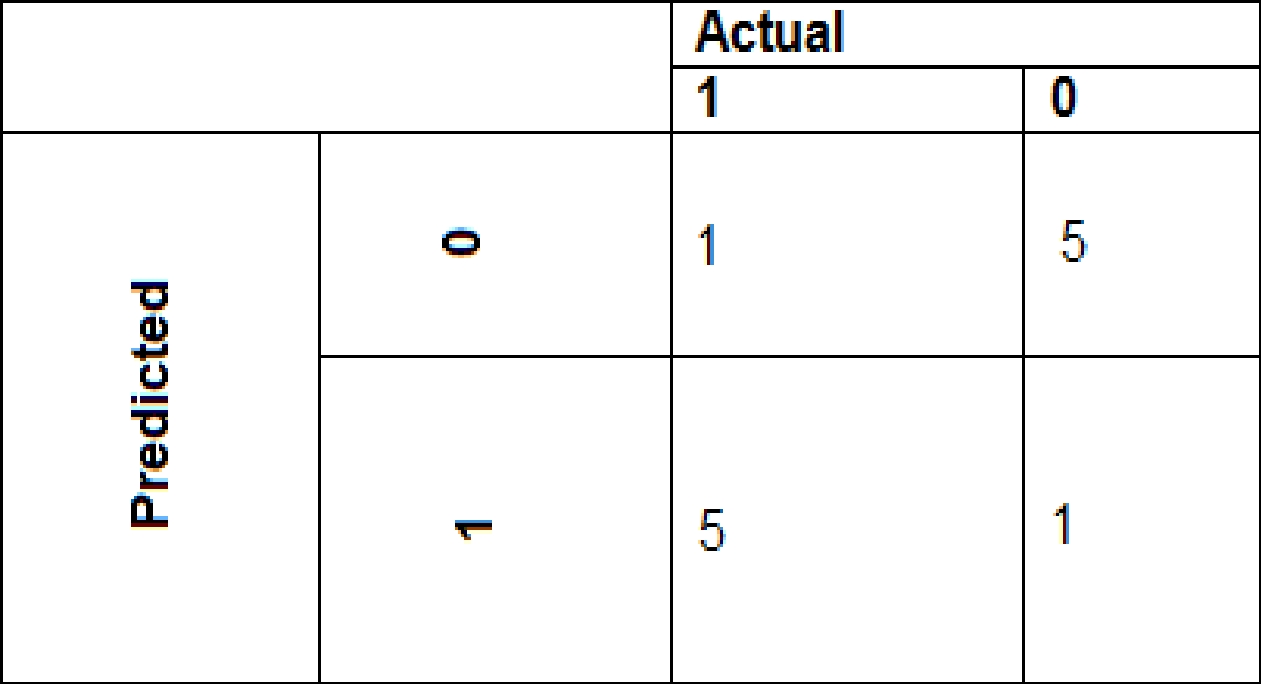

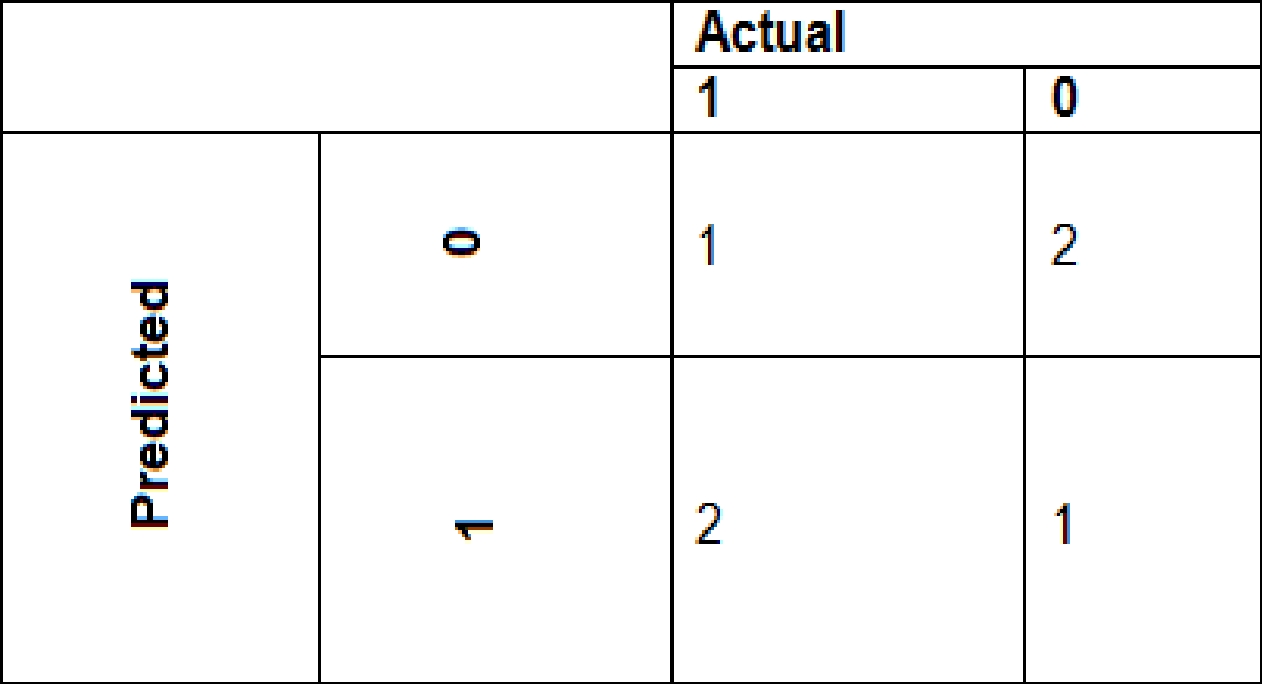

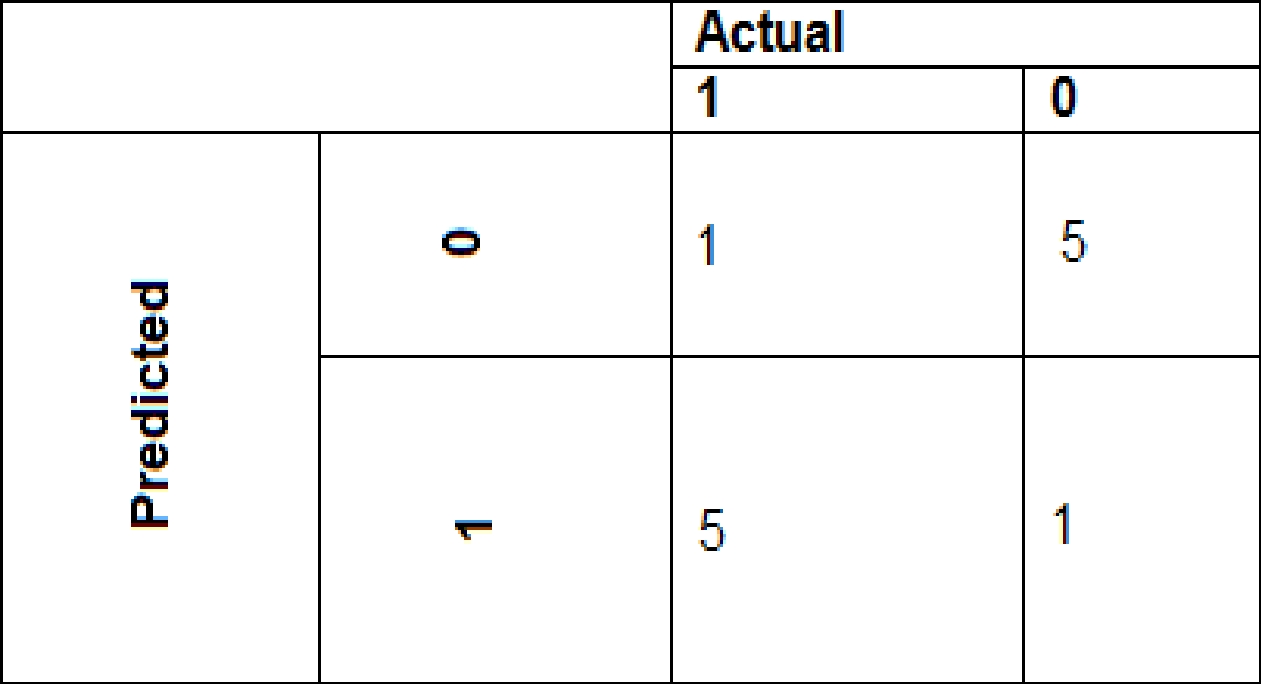

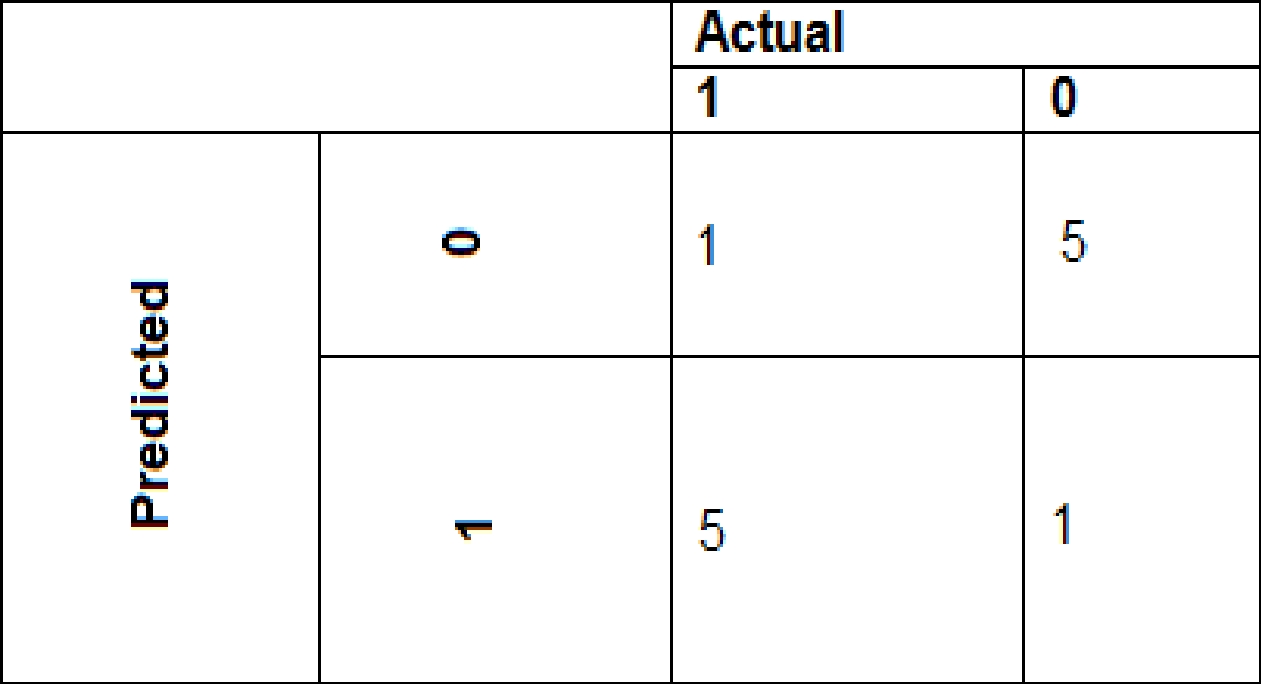

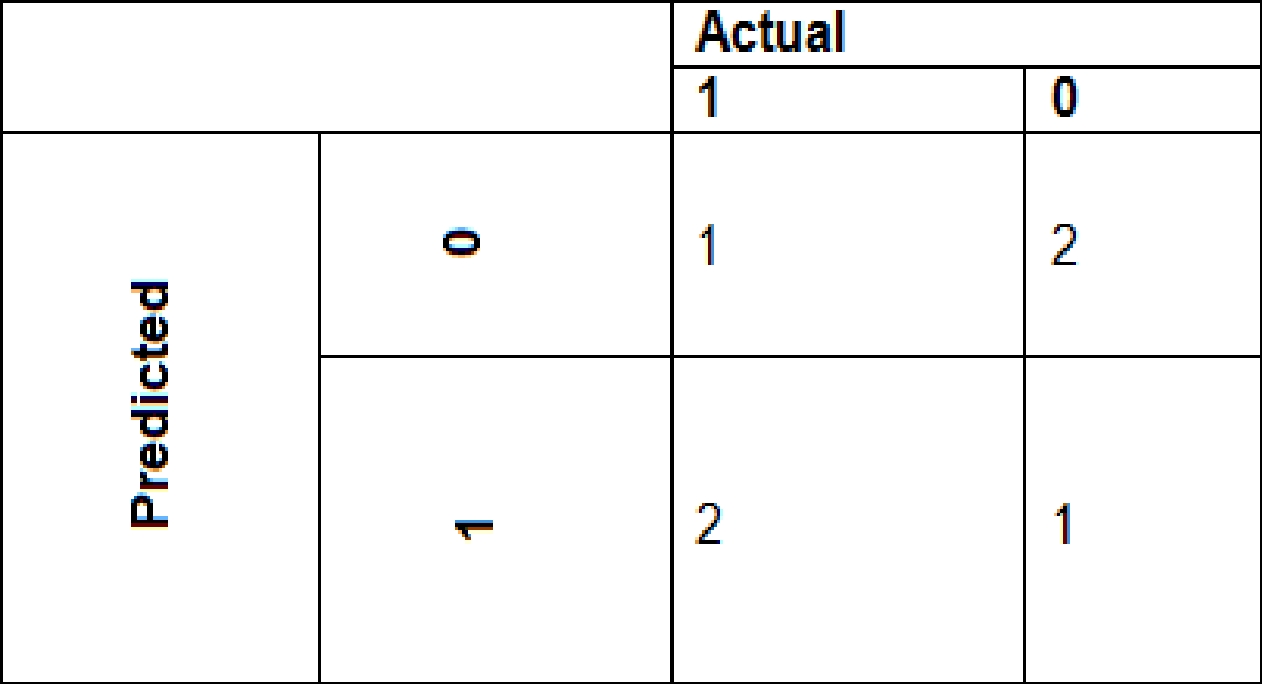

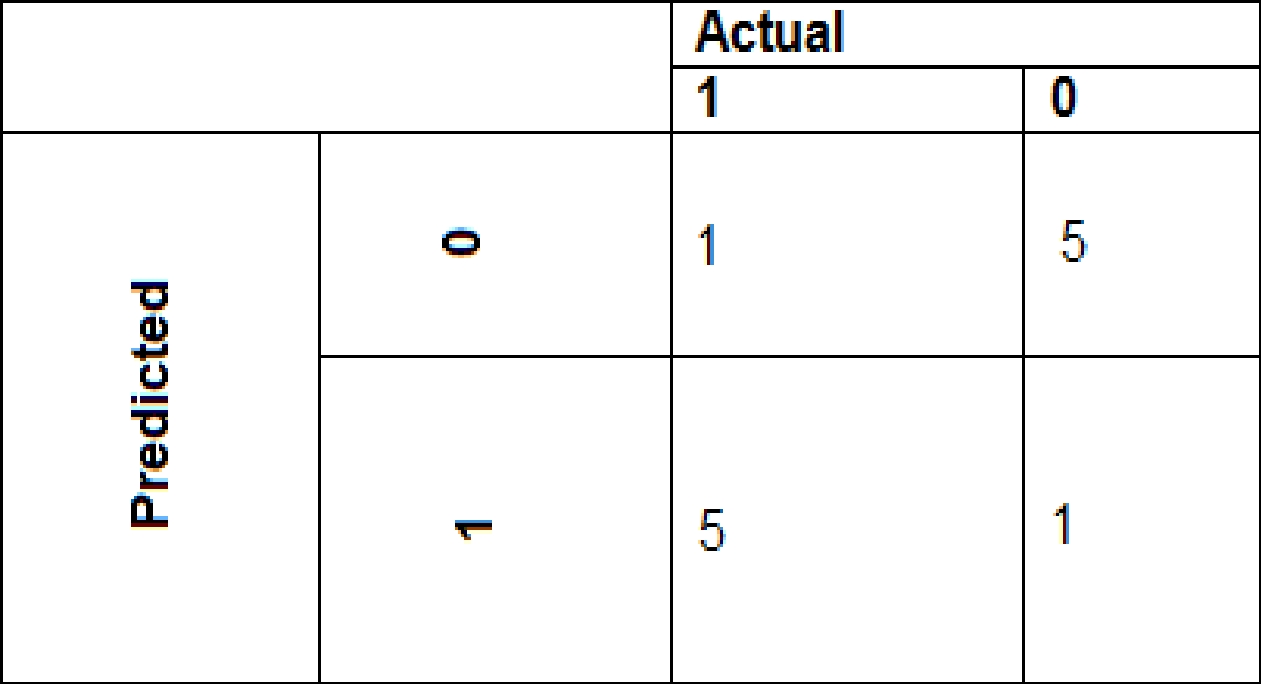

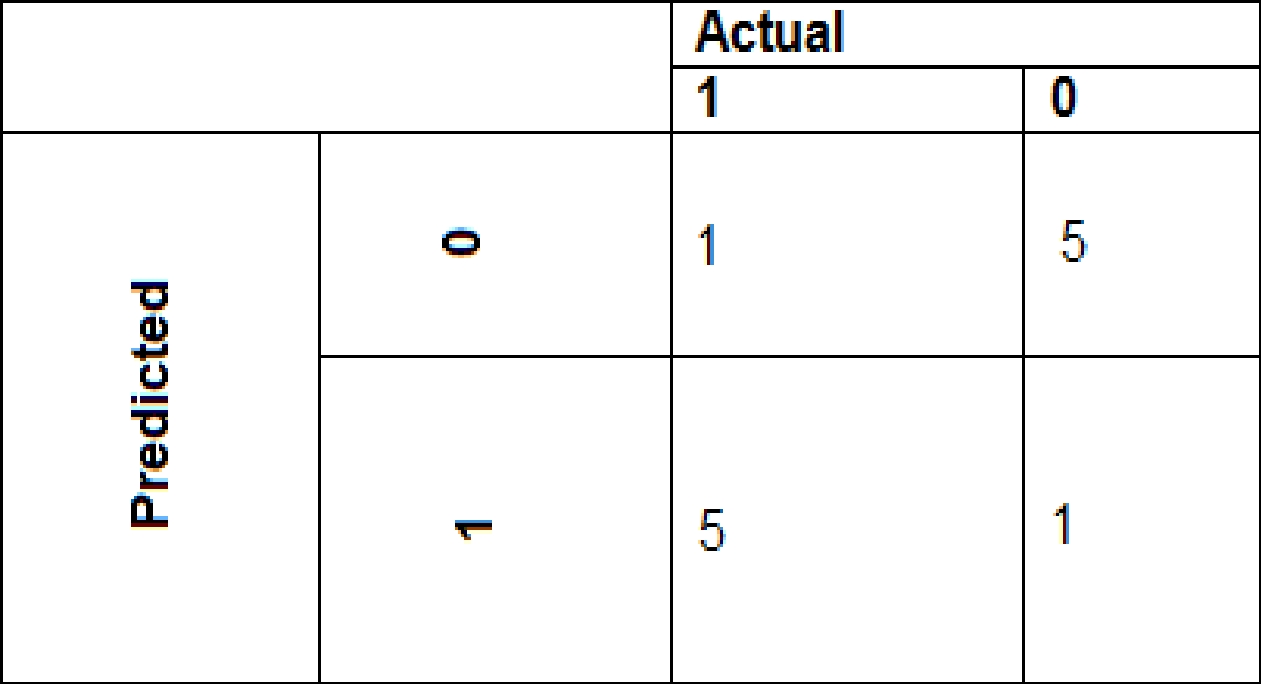

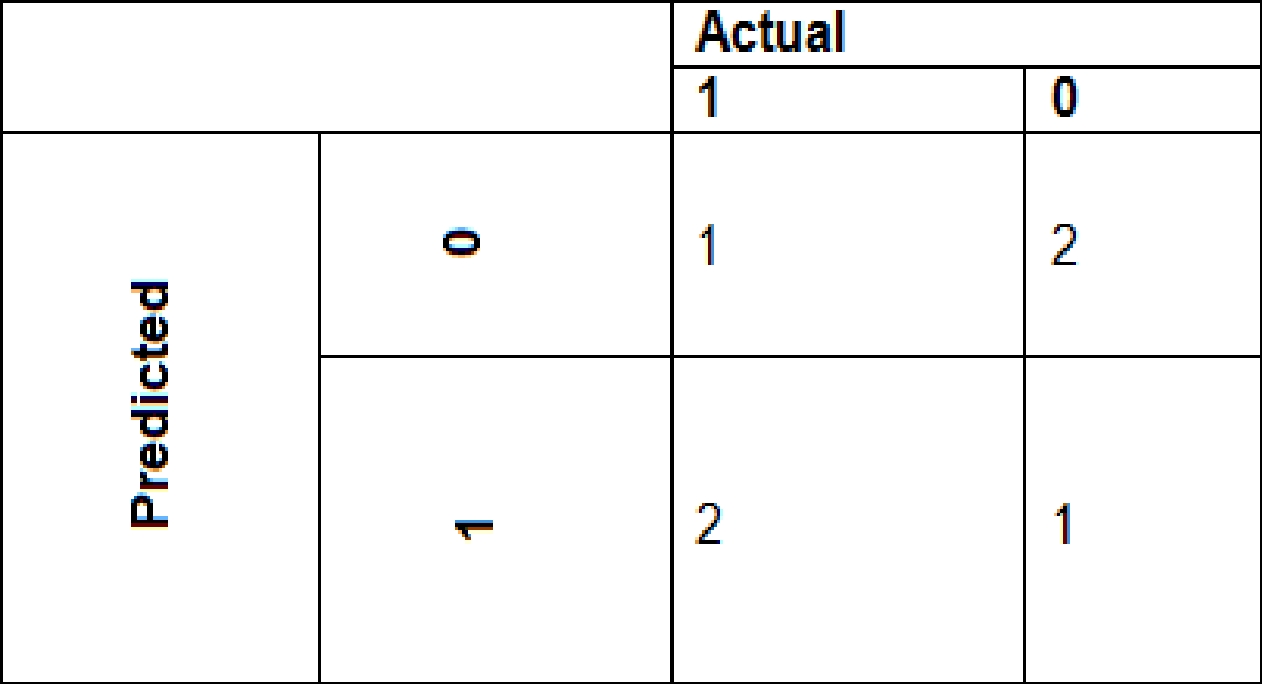

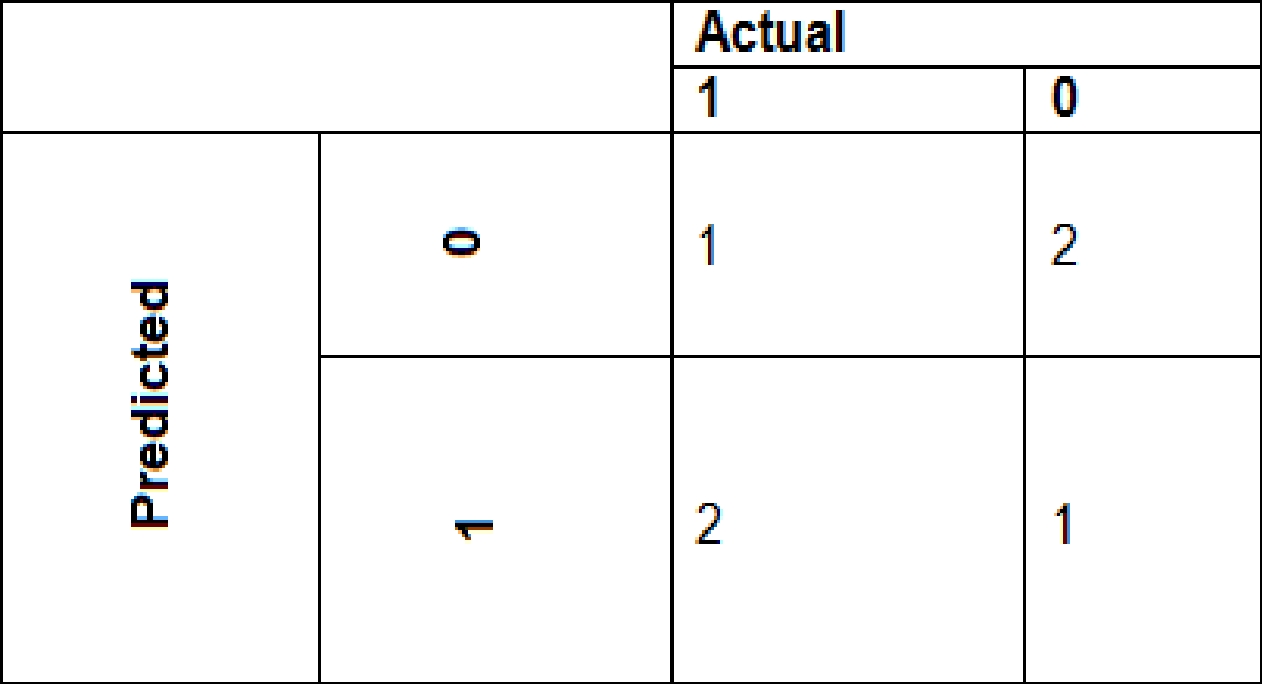

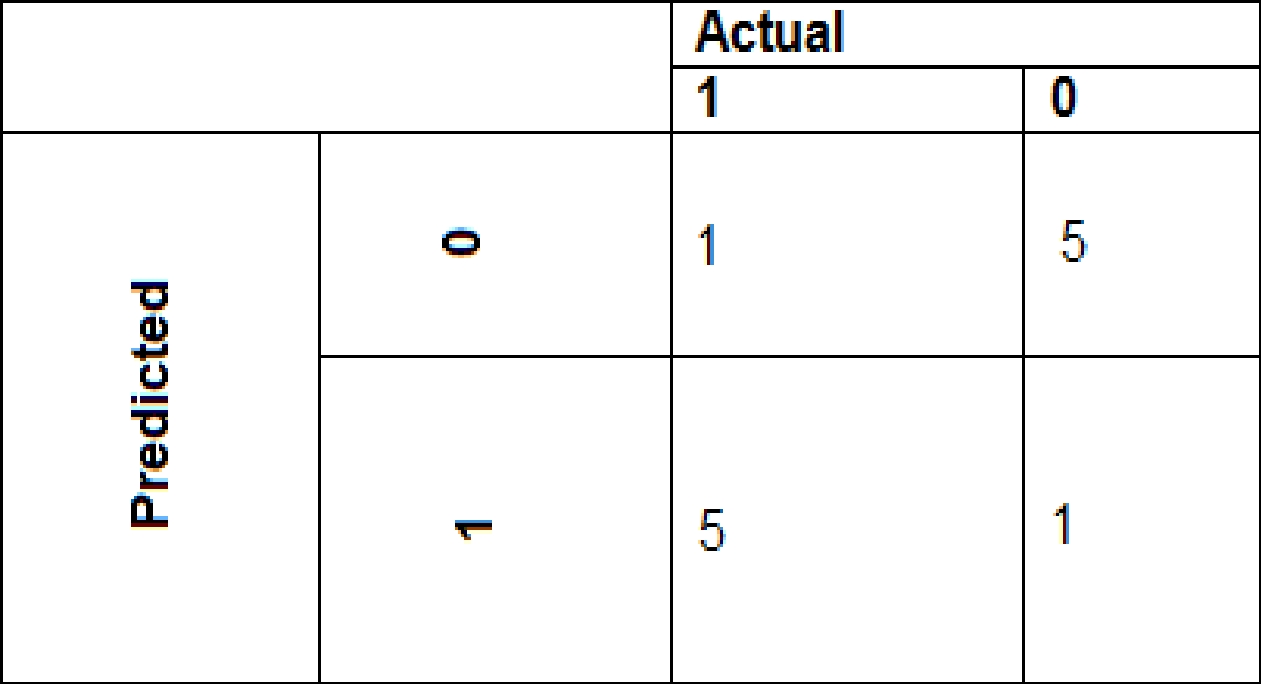

• The ad propensity model uses cost factors shown in the following diagram:

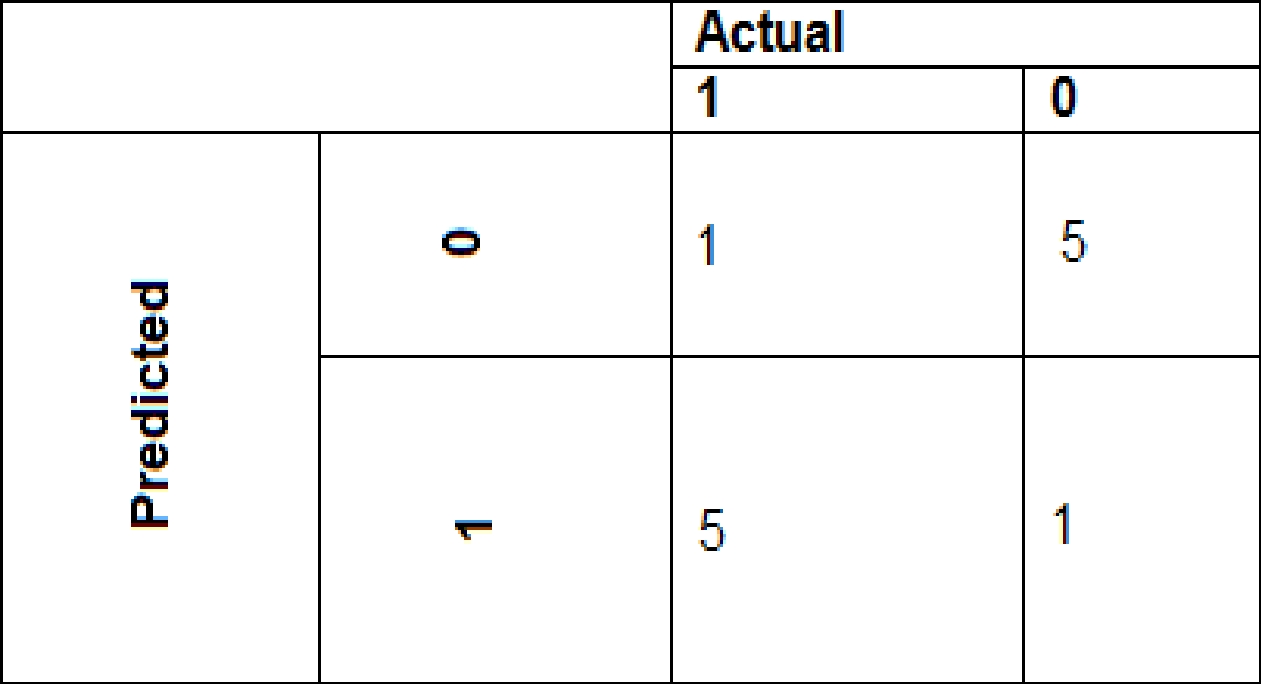

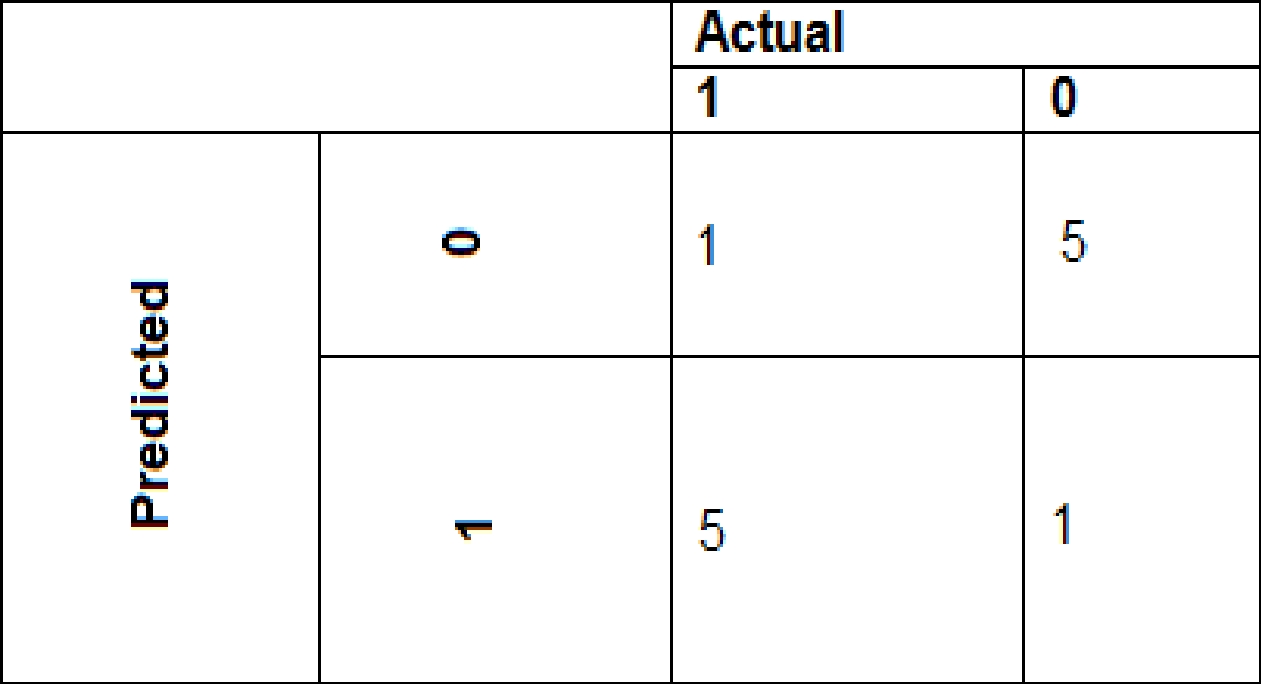

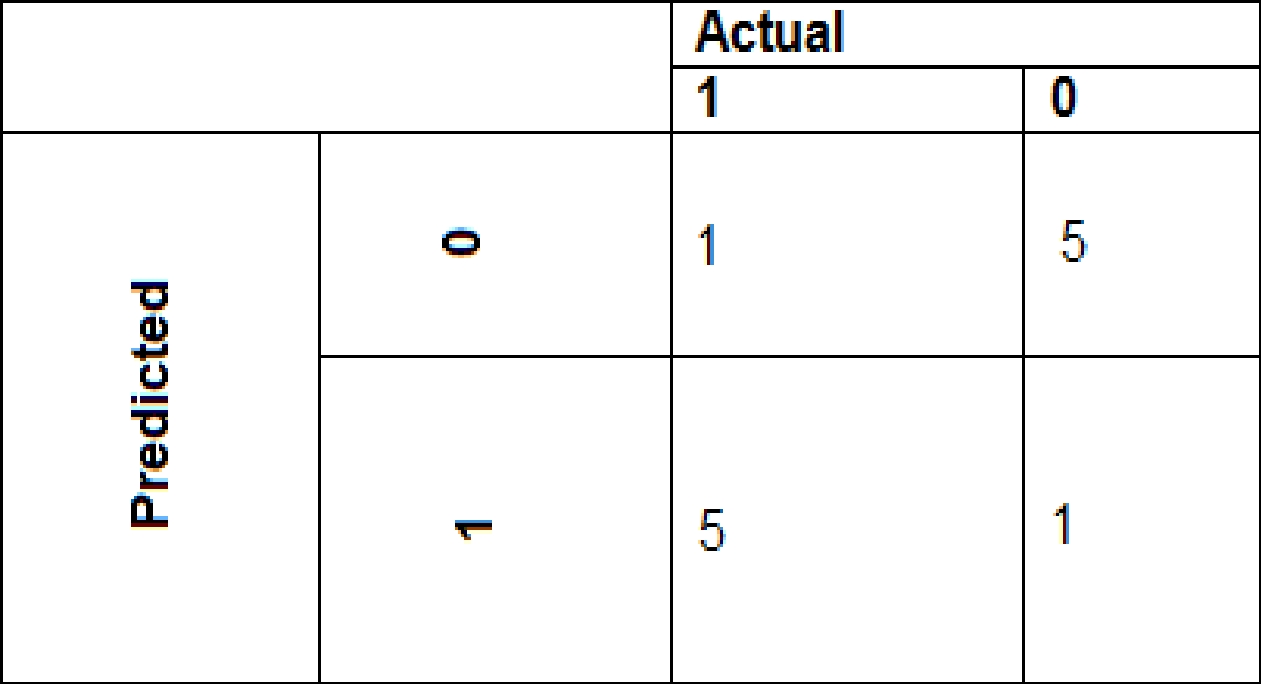

The ad propensity model uses proposed cost factors shown in the following diagram:

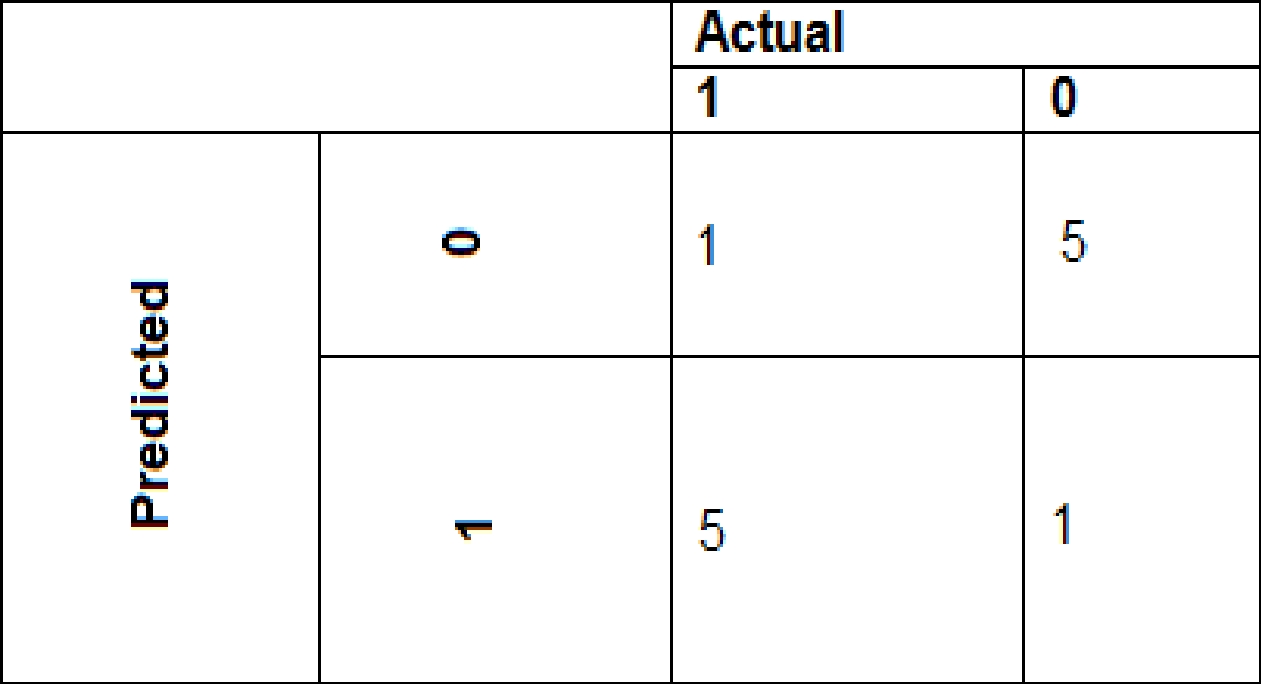

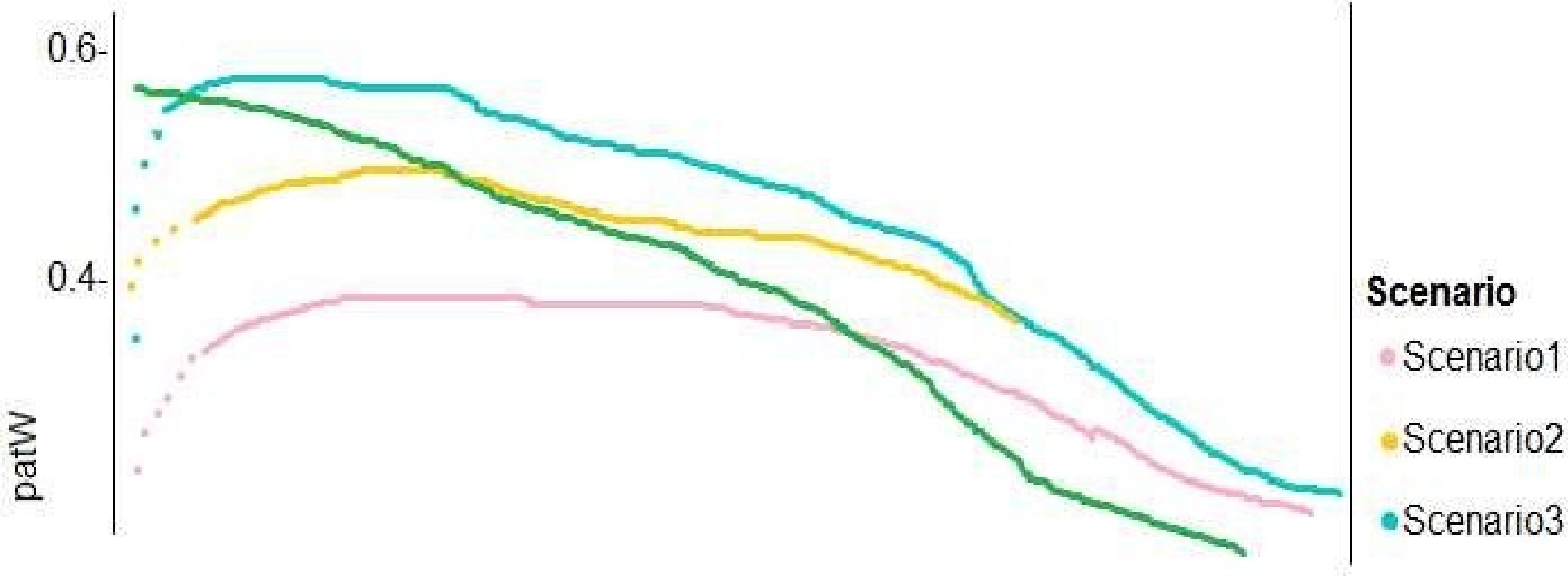

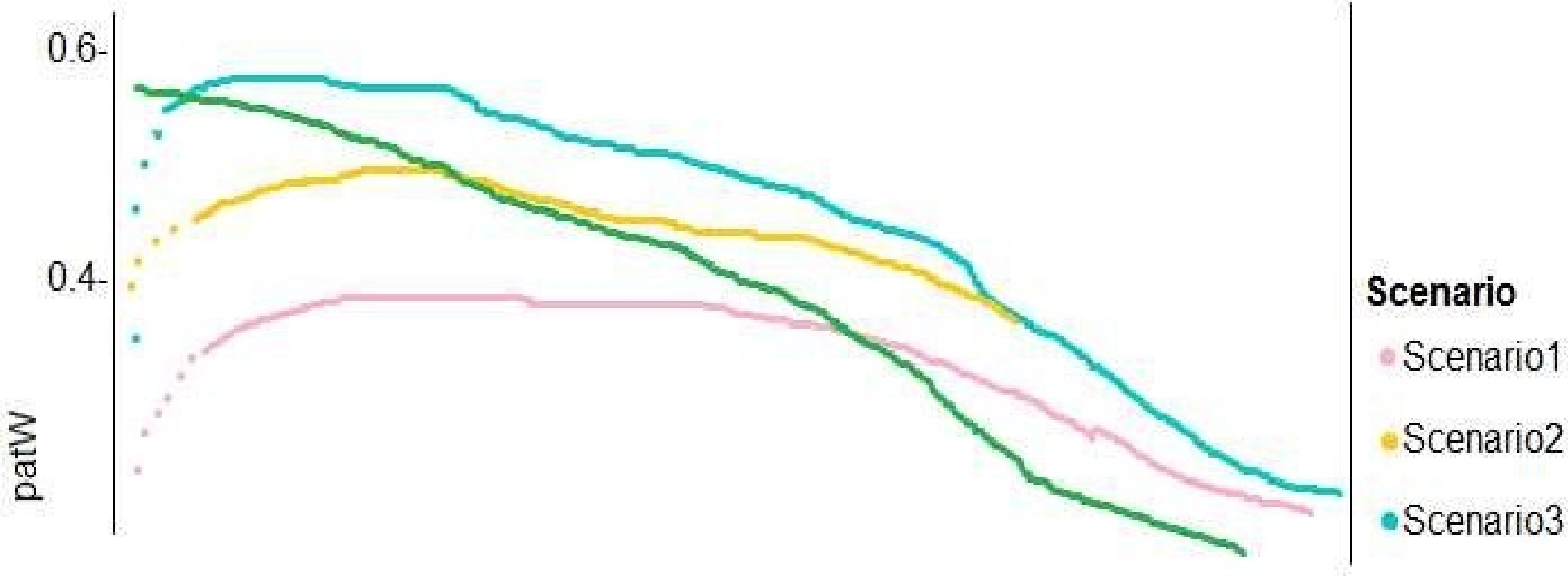

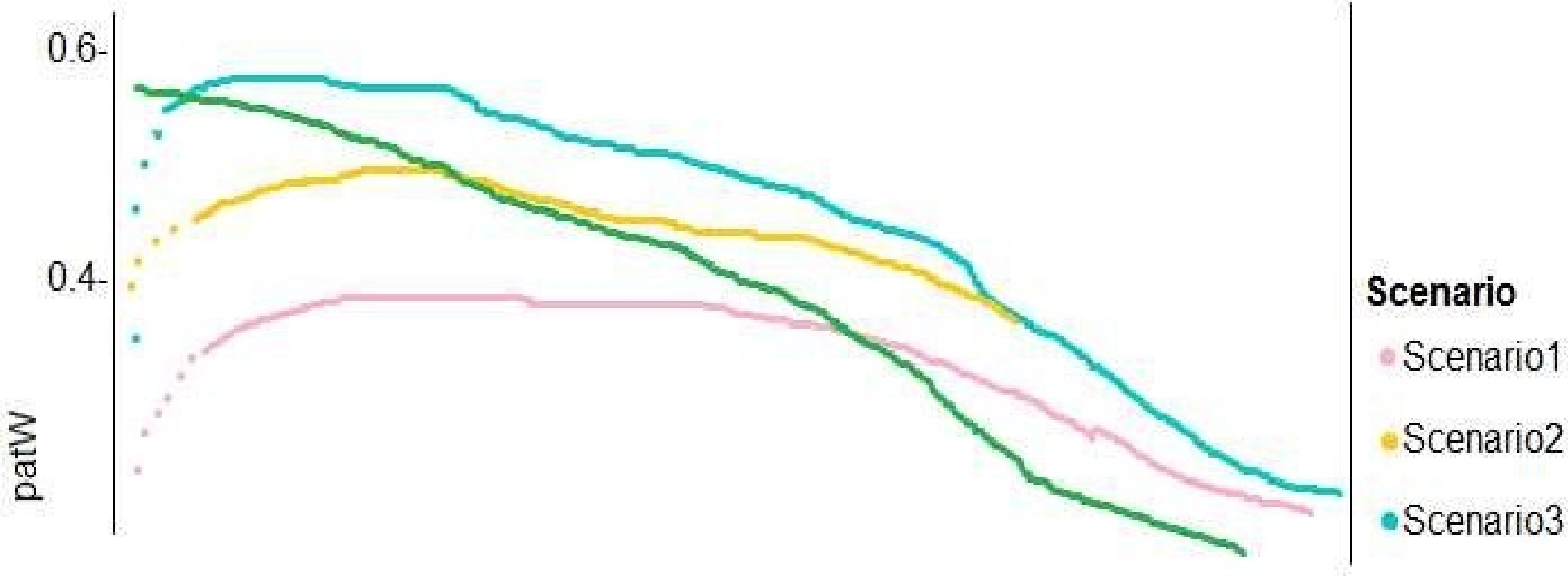

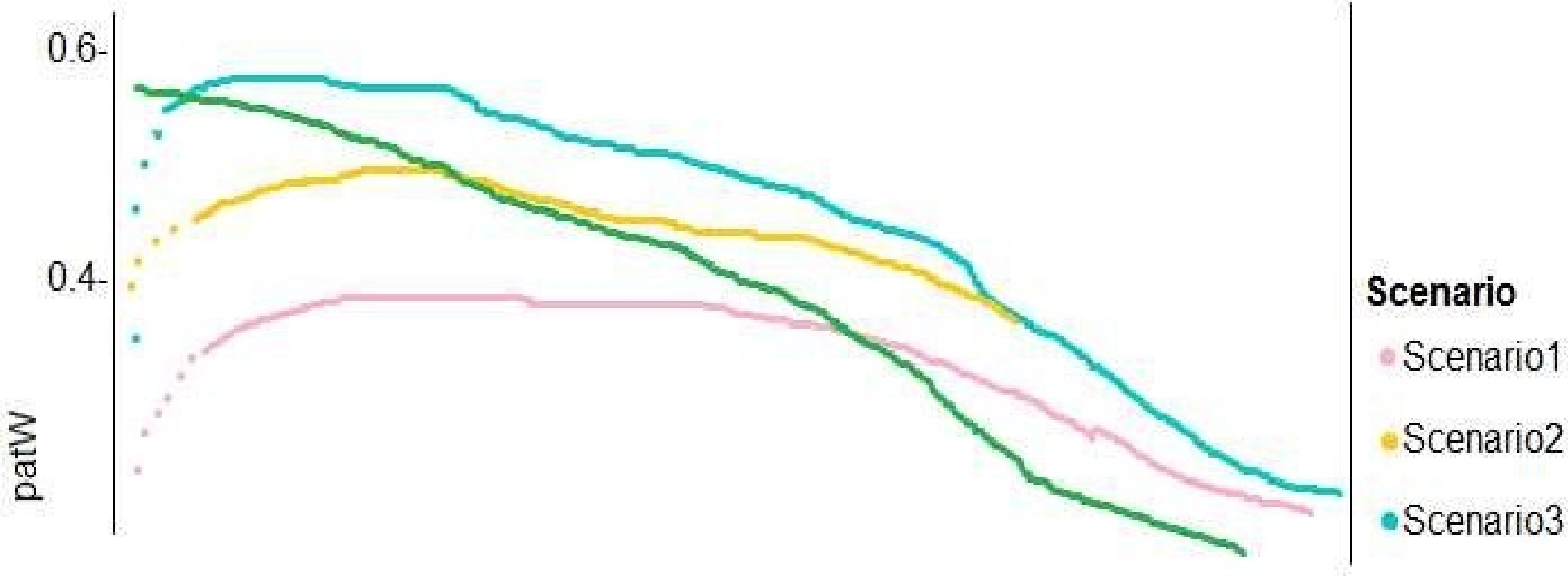

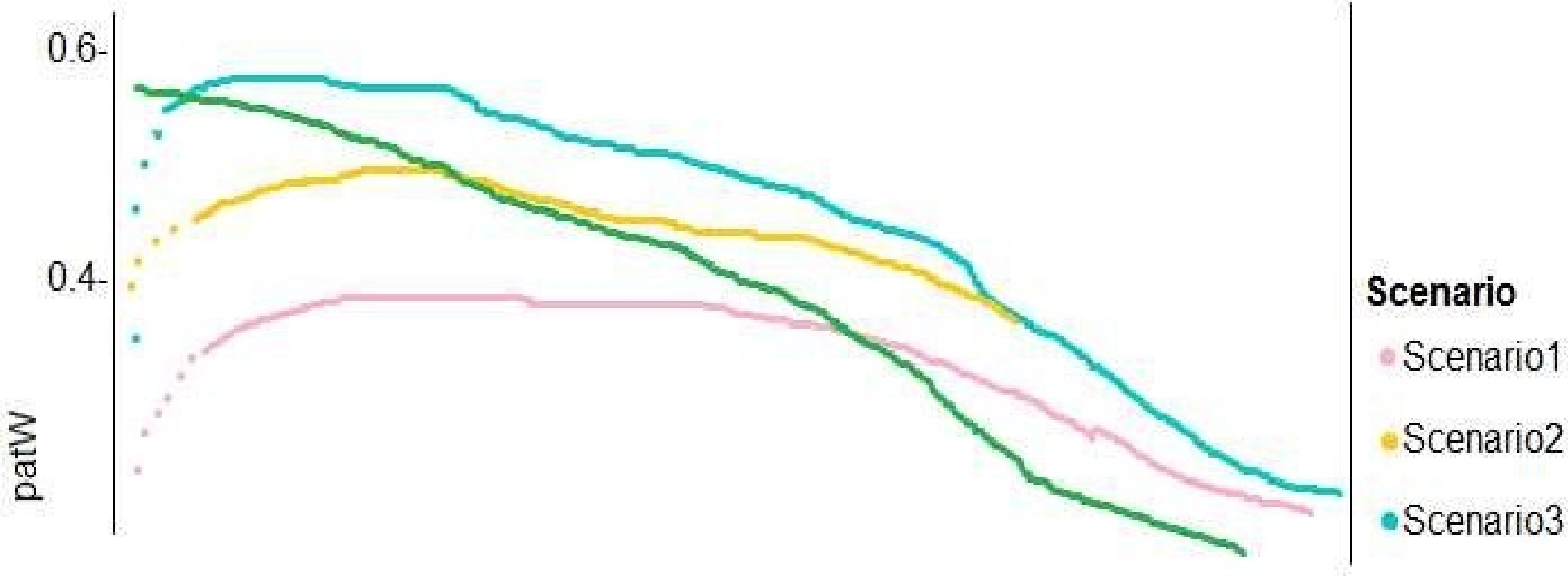

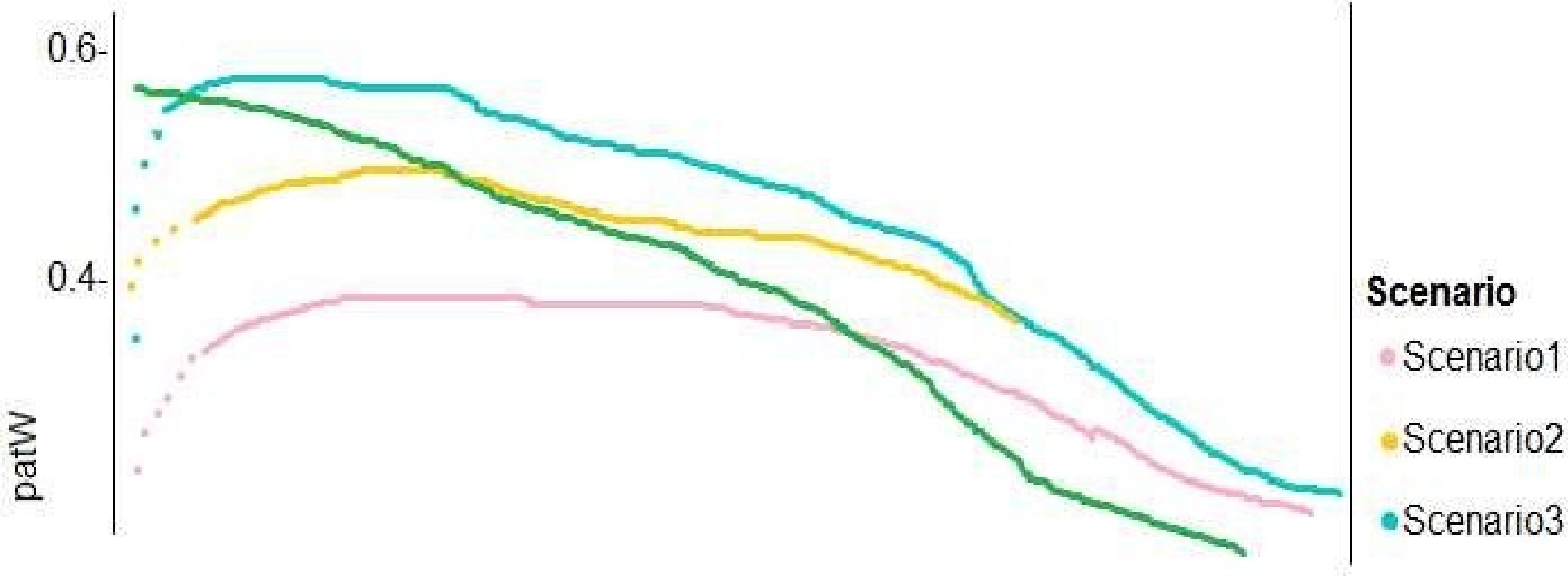

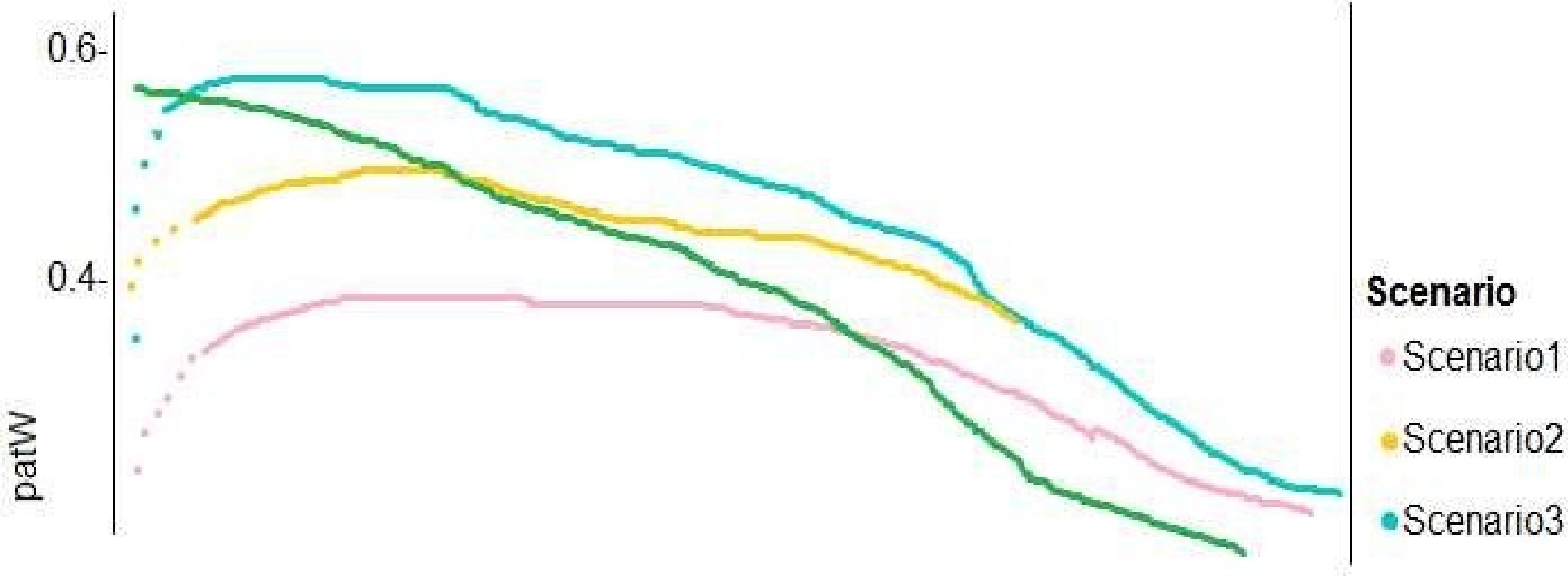

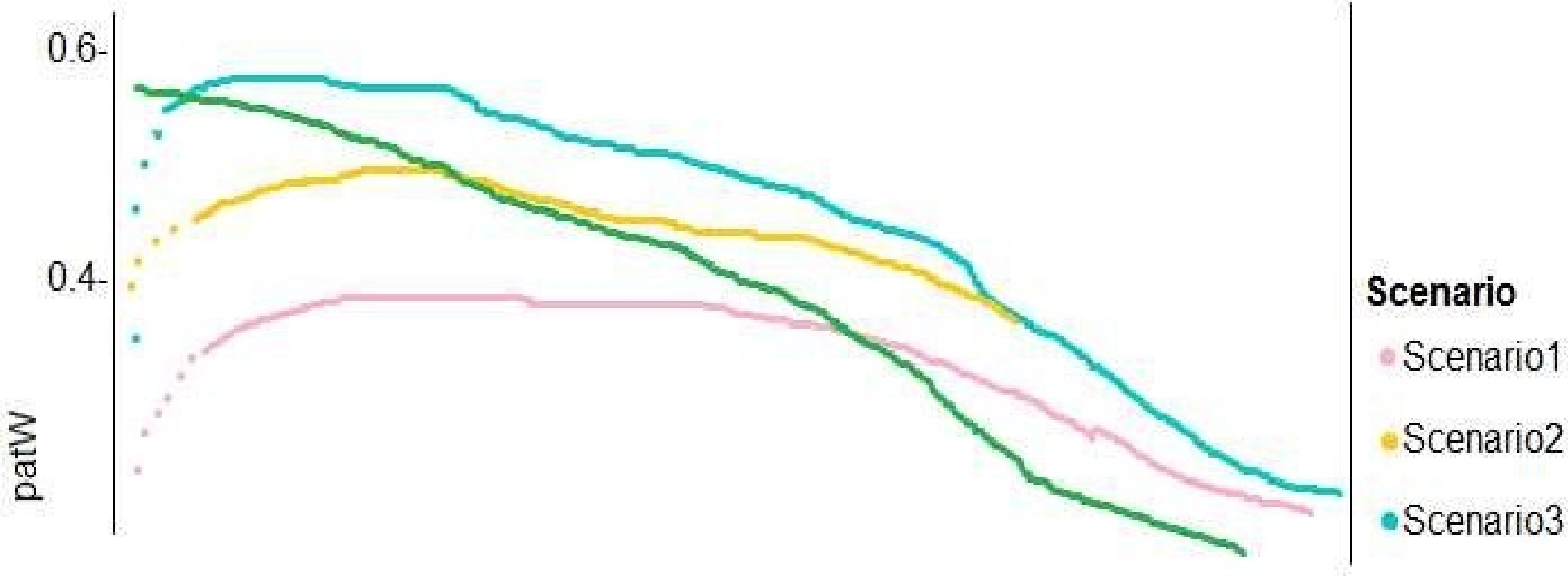

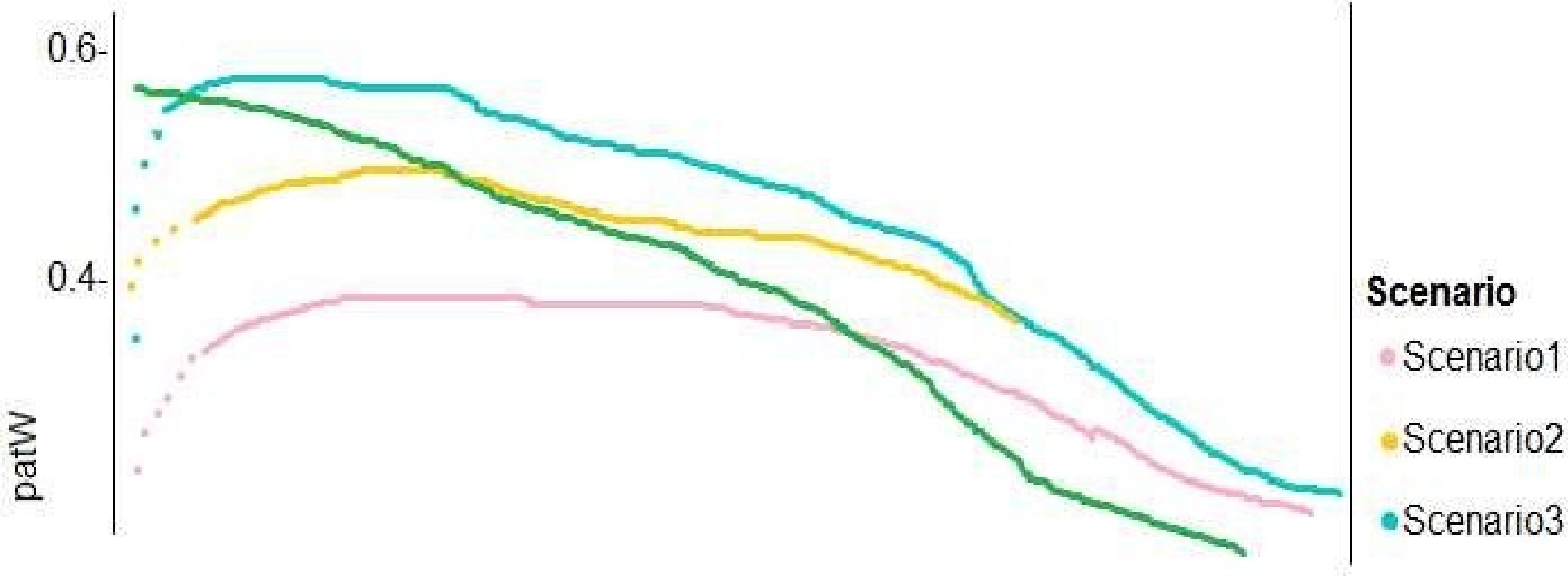

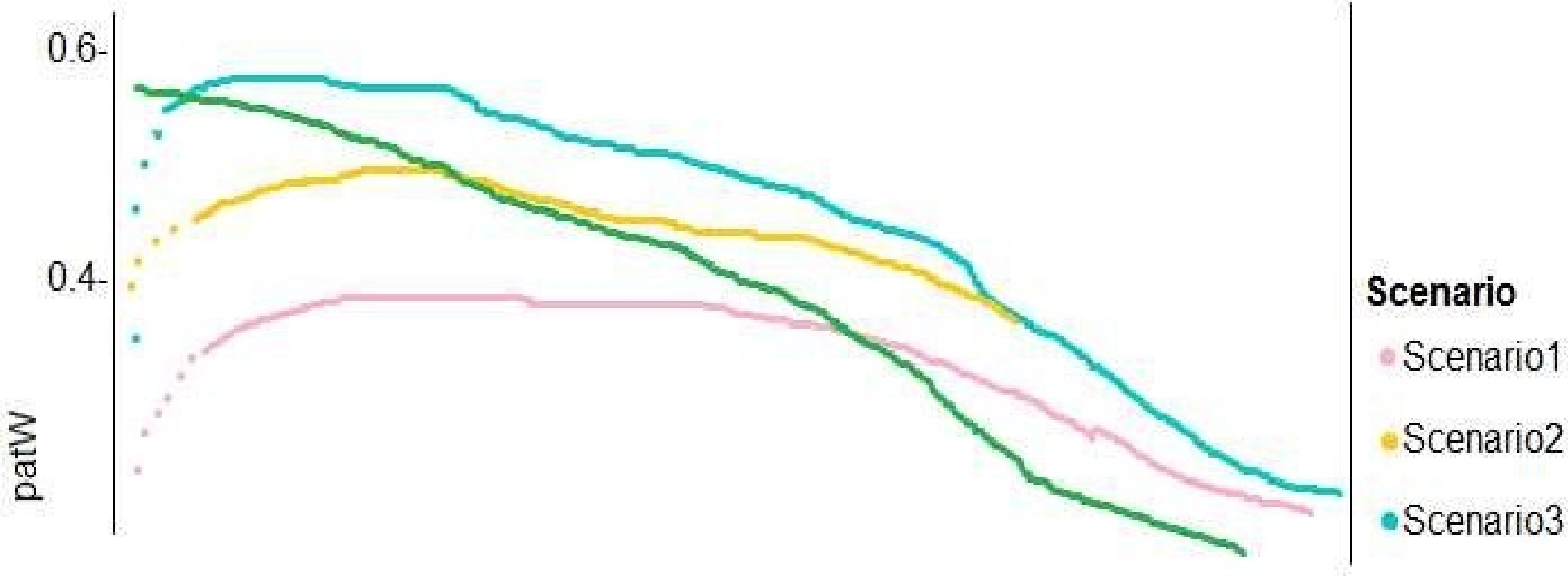

Performance curves of current and proposed cost factor scenarios are shown in the following diagram:

Penalty detection and sentiment

Findings

• Data scientists must build an intelligent solution by using multiple machine learning models for penalty event detection.

• Data scientists must build notebooks in a local environment using automatic feature engineering and model building in machine learning pipelines.

• Notebooks must be deployed to retrain by using Spark instances with dynamic worker allocation.

• Notebooks must execute with the same code on new Spark instances, so that only the source of the data needs to be recoded.

• Global penalty detection models must be trained by using dynamic runtime graph computation during training.

• Local penalty detection models must be written by using BrainScript.

• Experiments for local crowd sentiment models must combine local penalty detection data.

• Crowd sentiment models must identify known sounds such as cheers and known catch phrases. Individual crowd sentiment models will detect similar sounds.

• All shared features for local models are continuous variables.

• Shared features must use double precision. Subsequent layers must have aggregate running mean and standard deviation metrics available.
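The last requirement, double-precision features with running mean and standard deviation available to later layers, resembles what a batch-normalization layer tracks. The following is a minimal sketch of that idea using NumPy. The class name `RunningBatchNorm`, the momentum value, and the epsilon are illustrative assumptions and are not taken from the case study.

```python
import numpy as np


class RunningBatchNorm:
    """Normalizes each feature and keeps running statistics in float64."""

    def __init__(self, n_features, momentum=0.1, eps=1e-5):
        self.momentum = momentum  # weight given to the newest batch (assumed value)
        self.eps = eps            # avoids division by zero
        self.running_mean = np.zeros(n_features, dtype=np.float64)
        self.running_var = np.ones(n_features, dtype=np.float64)

    def forward(self, x, training=True):
        x = np.asarray(x, dtype=np.float64)
        if training:
            # Use the statistics of the current batch and fold them into the running values.
            mean = x.mean(axis=0)
            var = x.var(axis=0)
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            # At inference time, rely on the aggregated running statistics.
            mean, var = self.running_mean, self.running_var
        return (x - mean) / np.sqrt(var + self.eps)

    @property
    def running_std(self):
        return np.sqrt(self.running_var)
```

During training each call to `forward` updates the running values; with `training=False` the stored statistics are reused, which is what makes them available to subsequent layers.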

segments

During the initial weeks in production, the following was observed:

• Ad response rates declined.

• Drops were not consistent across ad styles.

• The distribution of features across training and production data is not consistent.

Analysis shows that of the 100 numeric features on user location and behavior, the 47 features that come from location sources are being used as raw features. A suggested experiment to remedy the bias and variance issue is to engineer 10 linearly uncorrelated features.
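One standard way to derive linearly uncorrelated features from correlated raw inputs is principal component analysis (PCA). Whether PCA is the intended remedy here is an assumption. The sketch below projects 47 synthetic stand-in features onto 10 principal components with NumPy. The function name `pca_reduce` and the random data are purely illustrative.

```python
import numpy as np


def pca_reduce(X, n_components=10):
    """Project X onto its top principal components; the outputs are linearly uncorrelated."""
    X = np.asarray(X, dtype=np.float64)
    X_centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ vt[:n_components].T


# Synthetic stand-in for the 47 raw location features (not real data).
rng = np.random.default_rng(0)
X_location = rng.normal(size=(500, 47))
Z = pca_reduce(X_location, n_components=10)
print(Z.shape)
```

In practice the raw features would usually be scaled to comparable units before the projection, since PCA is sensitive to feature scale.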

Penalty detection and sentiment

• Initial data discovery shows a wide range of densities of target states in training data used for crowd sentiment models.

• All penalty detection models show that inference phases using Stochastic Gradient Descent (SGD) are running too slow.

• Audio samples show that the length of a catch phrase varies between 25% and 47%, depending on region.

• The performance of the global penalty detection models shows lower variance but higher bias when comparing training and validation sets. Before implementing any feature changes, you must confirm the bias and variance using all training and validation cases.
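The last finding asks for bias and variance to be confirmed using all training and validation cases. One common way to use every case for both roles is k-fold cross-validation, sketched below with NumPy. The least-squares linear model is only a stand-in for the penalty detection model, and the function name `kfold_errors` is hypothetical.

```python
import numpy as np


def kfold_errors(X, y, k=5, seed=0):
    """Return mean training and validation MSE of a linear fit over k folds."""
    X = np.asarray(X, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    order = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(order, k)
    train_errors, val_errors = [], []
    for i in range(k):
        val_rows = folds[i]
        train_rows = np.concatenate([folds[j] for j in range(k) if j != i])
        # Fit weights plus an intercept on the training rows only.
        A = np.column_stack([X[train_rows], np.ones(len(train_rows))])
        w, *_ = np.linalg.lstsq(A, y[train_rows], rcond=None)

        def mse(rows):
            B = np.column_stack([X[rows], np.ones(len(rows))])
            return float(np.mean((B @ w - y[rows]) ** 2))

        train_errors.append(mse(train_rows))
        val_errors.append(mse(val_rows))
    return float(np.mean(train_errors)), float(np.mean(val_errors))
```

As a rough reading, training and validation errors that are both high and close together point to bias, while a low training error with a much higher validation error points to variance.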

Which normalization type should you use?

Quiz

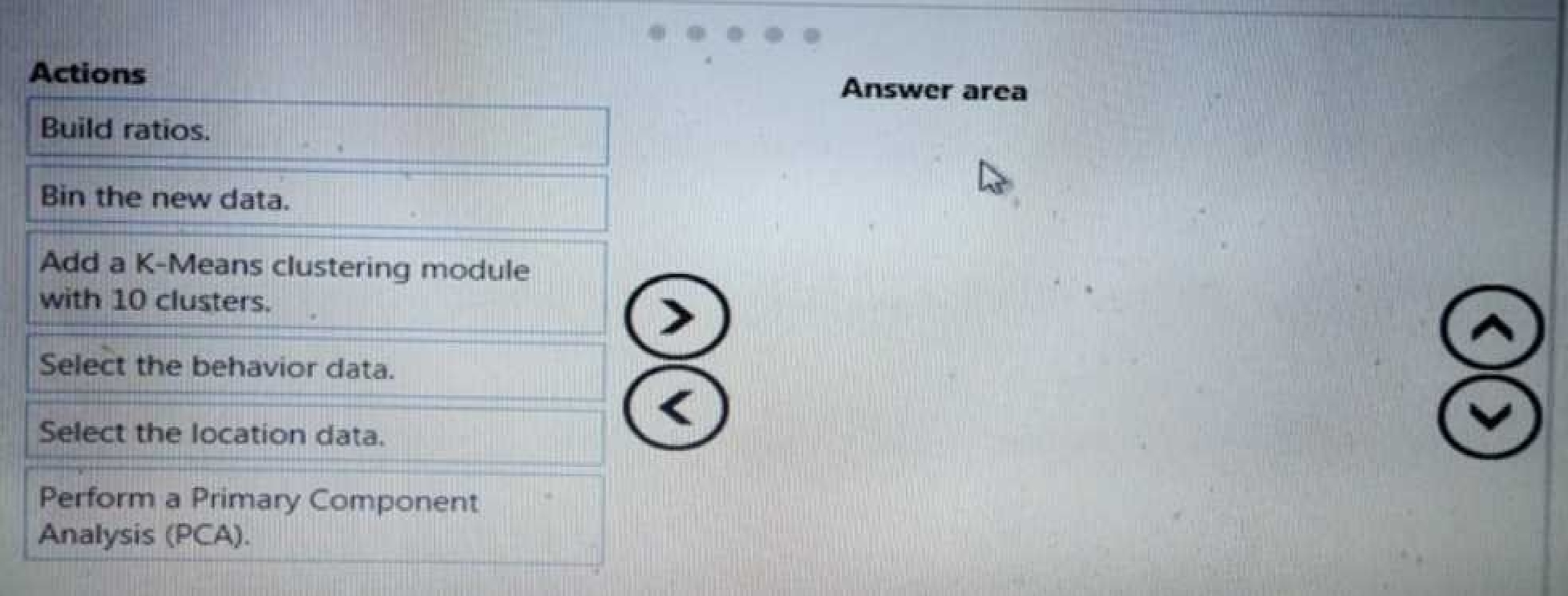

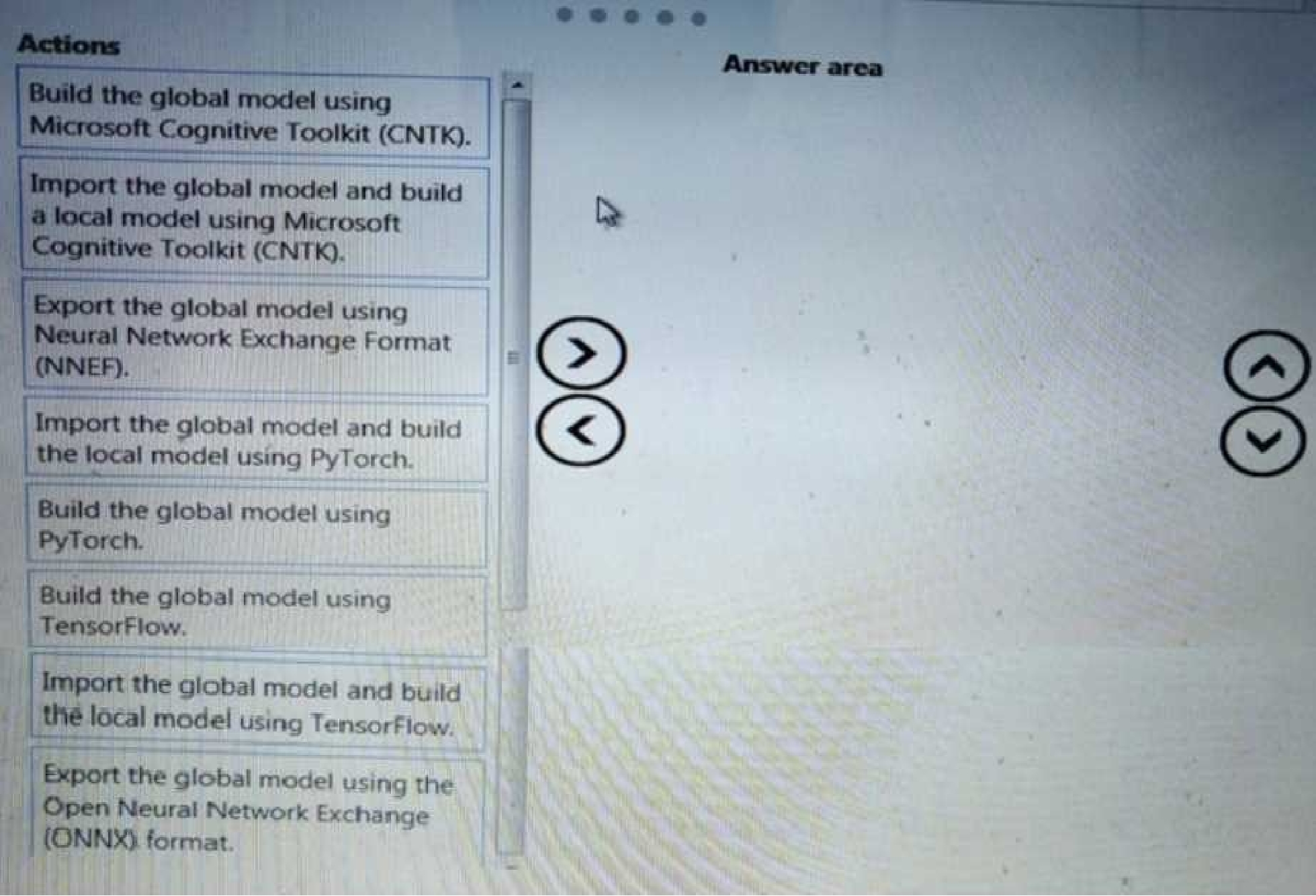

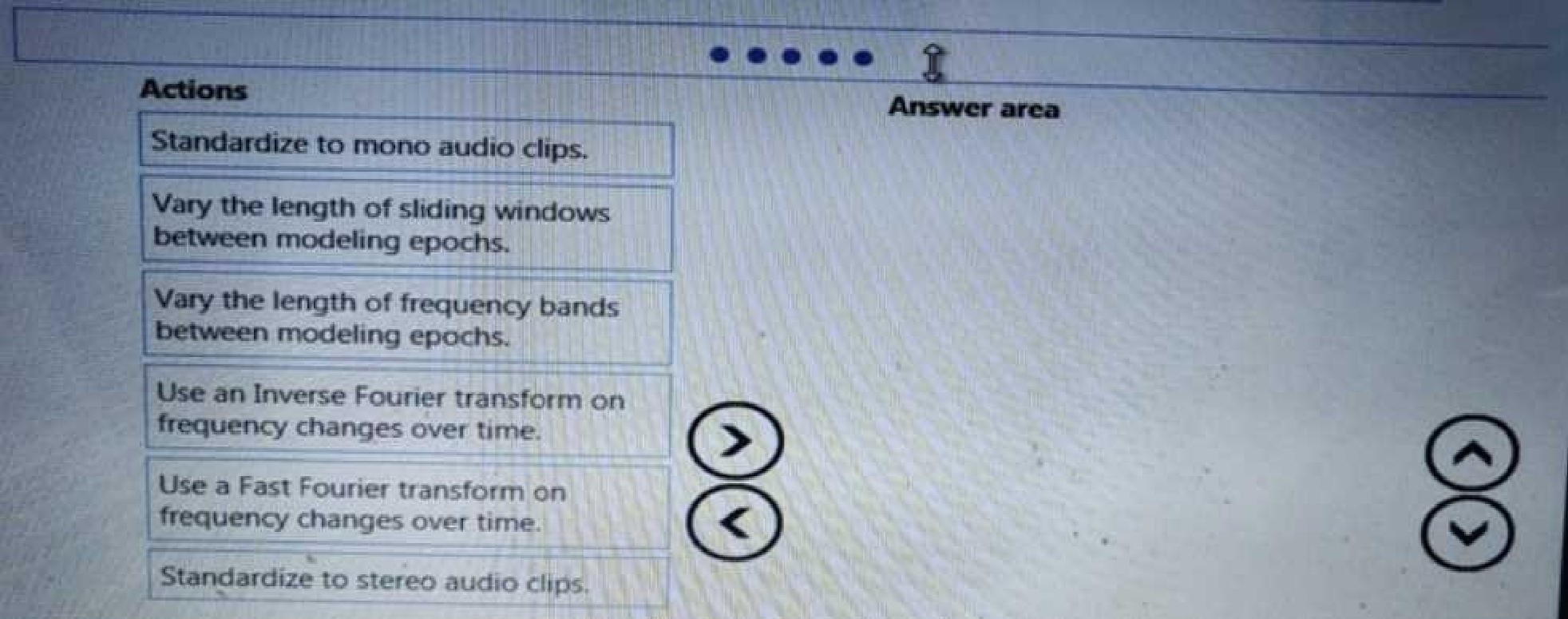

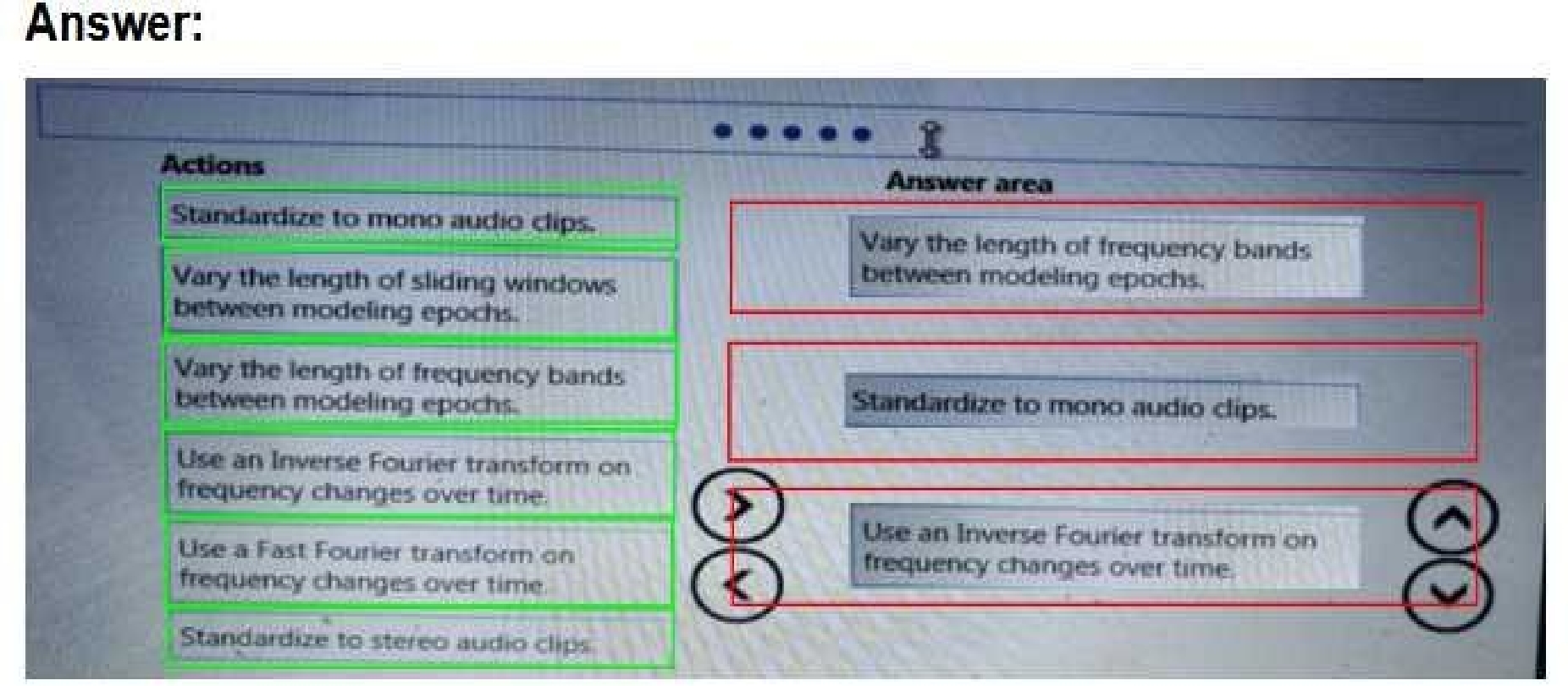

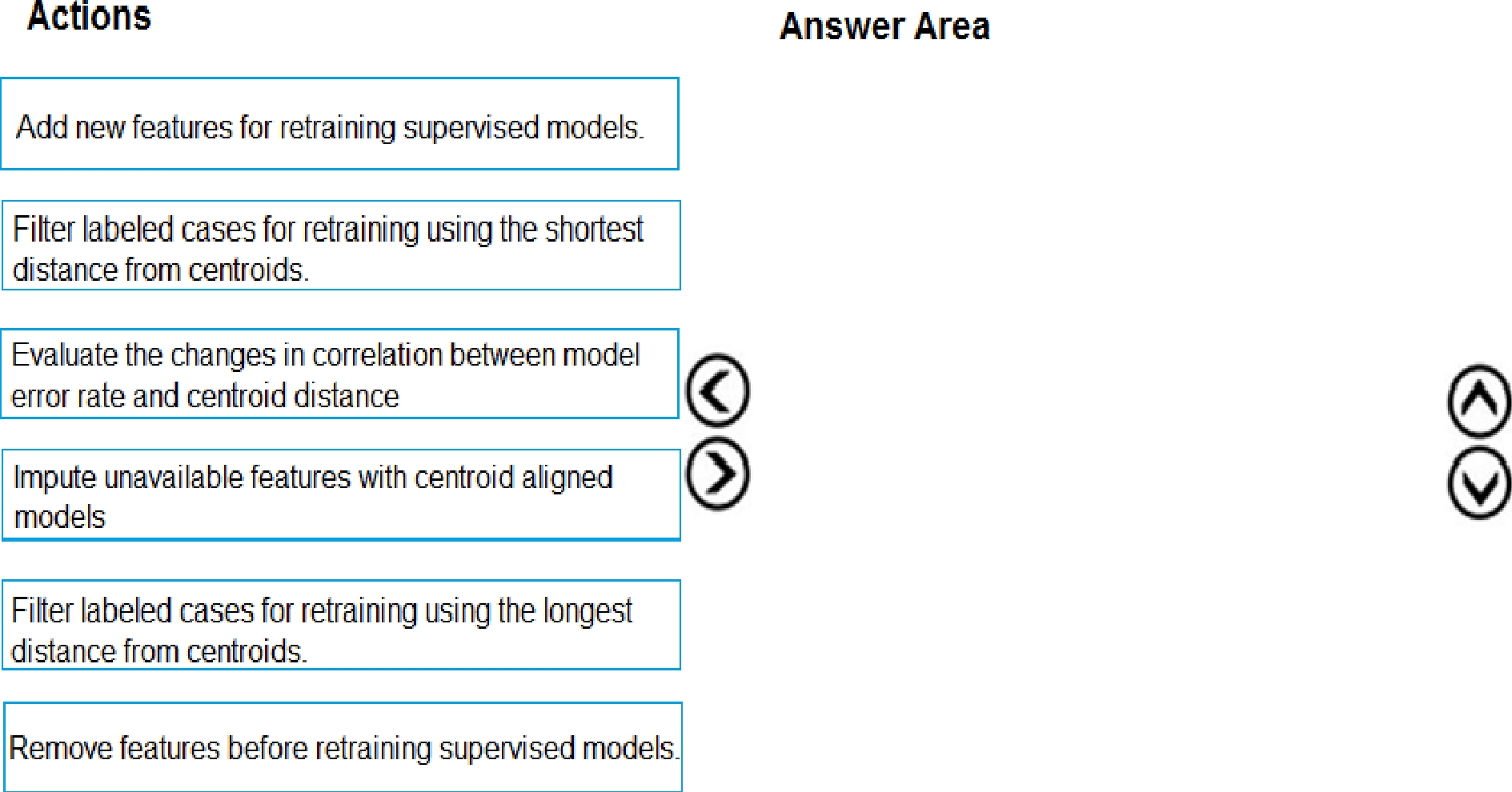

You need to modify the inputs for the global penalty event model to address the bias and variance issue.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Quiz

Which environment should you use?

Quiz

You need to define a process for penalty event detection.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Quiz

Overview

You are a data scientist in a company that provides data science for professional sporting events.

Models will be global and local market data to meet the following business goals:

• Understand sentiment of mobile device users at sporting events based on audio from crowd

reactions.

• Access a user's tendency to respond to an advertisement.

• Customize styles of ads served on mobile devices.

• Use video to detect penalty events.

Current environment

Requirements

• Media used for penalty event detection will be provided by consumer devices. Media may include

images and videos captured during the sporting event and snared using social media. The images

and videos will have varying sizes and formats.

• The data available for model building comprises of seven years of sporting event media. The

sporting event media includes: recorded videos, transcripts of radio commentary, and logs from

related social media feeds feeds captured during the sporting evens.

• Crowd sentiment will include audio recordings submitted by event attendees in both mono and

stereo

Formats.

Advertisements

• Ad response models must be trained at the beginning of each event and applied during the

sporting event.

• Market segmentation nxxlels must optimize for similar ad resporr.r history.

• Sampling must guarantee mutual and collective exclusivity local and global segmentation models

that share the same features.

• Local market segmentation models will be applied before determining a user’s propensity to

respond to an advertisement.

• Data scientists must be able to detect model degradation and decay.

• Ad response models must support non linear boundaries features.

• The ad propensity model uses a cut threshold is 0.45 and retrains occur if weighted Kappa

deviates from 0.1 +/-5%.

• The ad propensity model uses cost factors shown in the following diagram:

The ad propensity model uses proposed cost factors shown in the following diagram:

Performance curves of current and proposed cost factor scenarios are shown in the following

diagram:

Penalty detection and sentiment

Findings

• Data scientists must build an intelligent solution by using multiple machine learning models for

penalty event detection.

• Data scientists must build notebooks in a local environment using automatic feature engineering

and model building in machine learning pipelines.

• Notebooks must be deployed to retrain by using Spark instances with dynamic worker allocation

• Notebooks must execute with the same code on new Spark instances to recode only the source of

the data.

• Global penalty detection models must be trained by using dynamic runtime graph computation

during training.

• Local penalty detection models must be written by using BrainScript.

• Experiments for local crowd sentiment models must combine local penalty detection data.

• Crowd sentiment models must identify known sounds such as cheers and known catch phrases.

Individual crowd sentiment models will detect similar sounds.

• All shared features for local models are continuous variables.

• Shared features must use double precision. Subsequent layers must have aggregate running mean

and standard deviation metrics Available.

segments

During the initial weeks in production, the following was observed:

• Ad response rates declined.

• Drops were not consistent across ad styles.

• The distribution of features across training and production data are not consistent.

Analysis shows that of the 100 numeric features on user location and behavior, the 47 features that

come from location sources are being used as raw features. A suggested experiment to remedy the

bias and variance issue is to engineer 10 linearly uncorrected features.

Penalty detection and sentiment

• Initial data discovery shows a wide range of densities of target states in training data used for crowd sentiment models.

• All penalty detection models show that inference phases using Stochastic Gradient Descent (SGD) are running too slow.

• Audio samples show that the length of a catch phrase varies between 25%-47%, depending on region.

• The performance of the global penalty detection models shows lower variance but higher bias when comparing training and validation sets. Before implementing any feature changes, you must confirm the bias and variance using all training and validation cases.
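One common reading of "using all training and validation cases" is k-fold cross-validation, where every case is used for validation exactly once and for training in the remaining folds. The sketch below only generates the index splits. The function name `k_fold_indices` and the fold sizes are hypothetical, and the case study does not name a specific procedure.

```python
def k_fold_indices(n_cases, n_folds):
    """Yield (train_idx, val_idx) pairs so each case is validated exactly once."""
    indices = list(range(n_cases))
    for fold in range(n_folds):
        # Every n_folds-th case, starting at this fold's offset, is held out.
        val_idx = indices[fold::n_folds]
        val_set = set(val_idx)
        train_idx = [i for i in indices if i not in val_set]
        yield train_idx, val_idx


folds = list(k_fold_indices(n_cases=10, n_folds=5))
validated = sorted(i for _, val_idx in folds for i in val_idx)
```

Comparing the mean training error with the mean validation error across the folds then gives a rough picture of bias (both errors high) and variance (a large gap between them).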

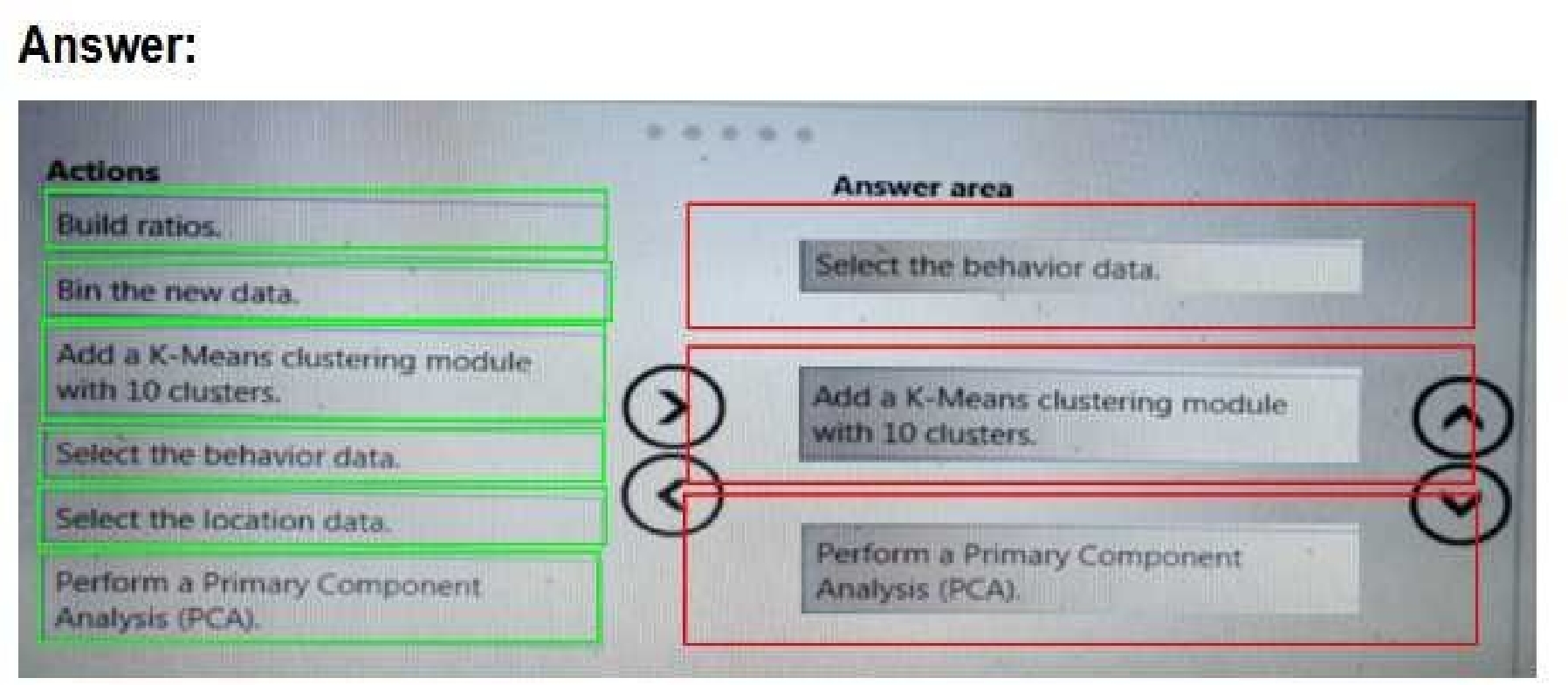

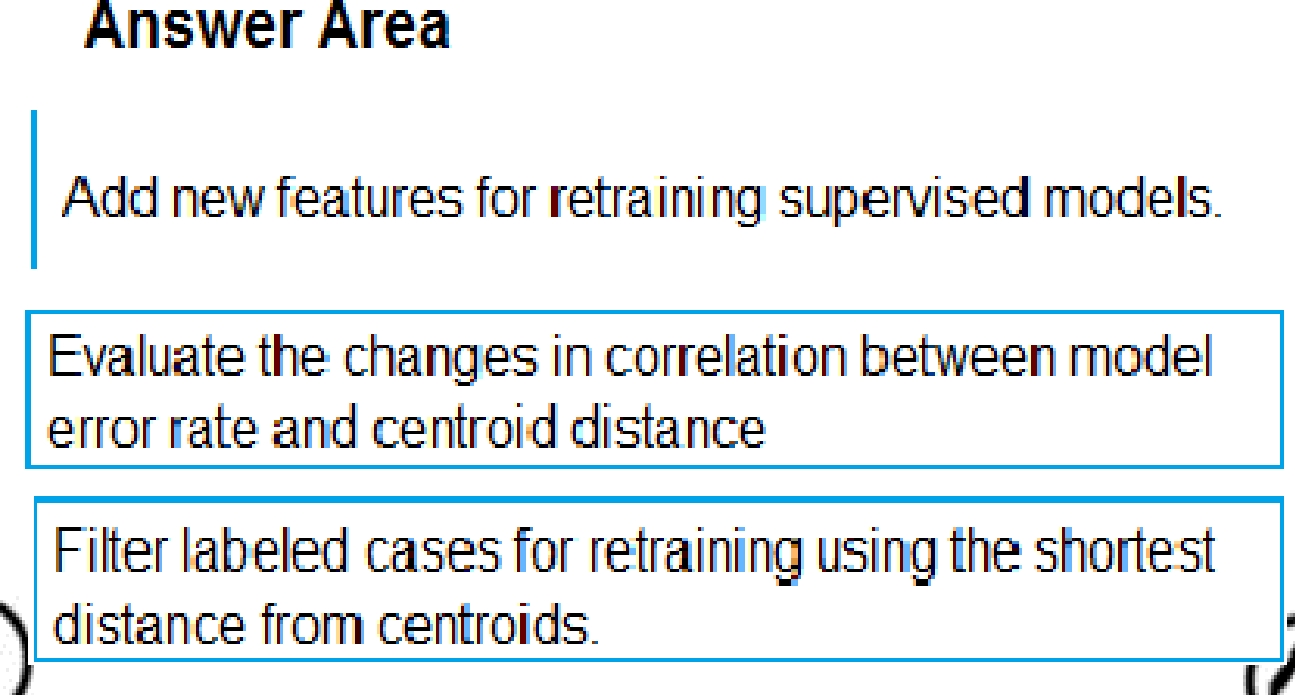

You need to define an evaluation strategy for the crowd sentiment models.

Which three actions should you perform in sequence? To answer, move the appropriate actions from

the list of actions to the answer area and arrange them in the correct order.

Scenario:

Experiments for local crowd sentiment models must combine local penalty detection data.

Crowd sentiment models must identify known sounds such as cheers and known catch phrases.

Individual crowd sentiment models will detect similar sounds.

Note: Evaluate the change in correlation between model error rate and centroid distance.

In machine learning, a nearest centroid classifier or nearest prototype classifier is a classification model that assigns to observations the label of the class of training samples whose mean (centroid) is closest to the observation.
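A minimal sketch of the nearest centroid rule described above, using Euclidean distance. The feature values and the labels `cheer` and `catch_phrase` are hypothetical and only illustrate the mechanics.

```python
import math


def fit_centroids(samples, labels):
    """Return {label: mean feature vector} for the training samples."""
    sums, counts = {}, {}
    for features, label in zip(samples, labels):
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical two-feature audio descriptors for two sound classes.
train_x = [[1.0, 1.0], [1.2, 0.8], [5.0, 5.0], [4.8, 5.2]]
train_y = ["cheer", "cheer", "catch_phrase", "catch_phrase"]
centroids = fit_centroids(train_x, train_y)
```

Calling `predict(centroids, [0.9, 1.1])` returns `"cheer"` because that observation lies closest to the mean of the `cheer` samples.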

Reference:

[https://en.wikipedia.org/wiki/Nearest_centroid_classifier](https://en.wikipedia.org/wiki/Nearest_centroid_classifier)

[https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/sweep-clustering](https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/sweep-clustering)

Quiz


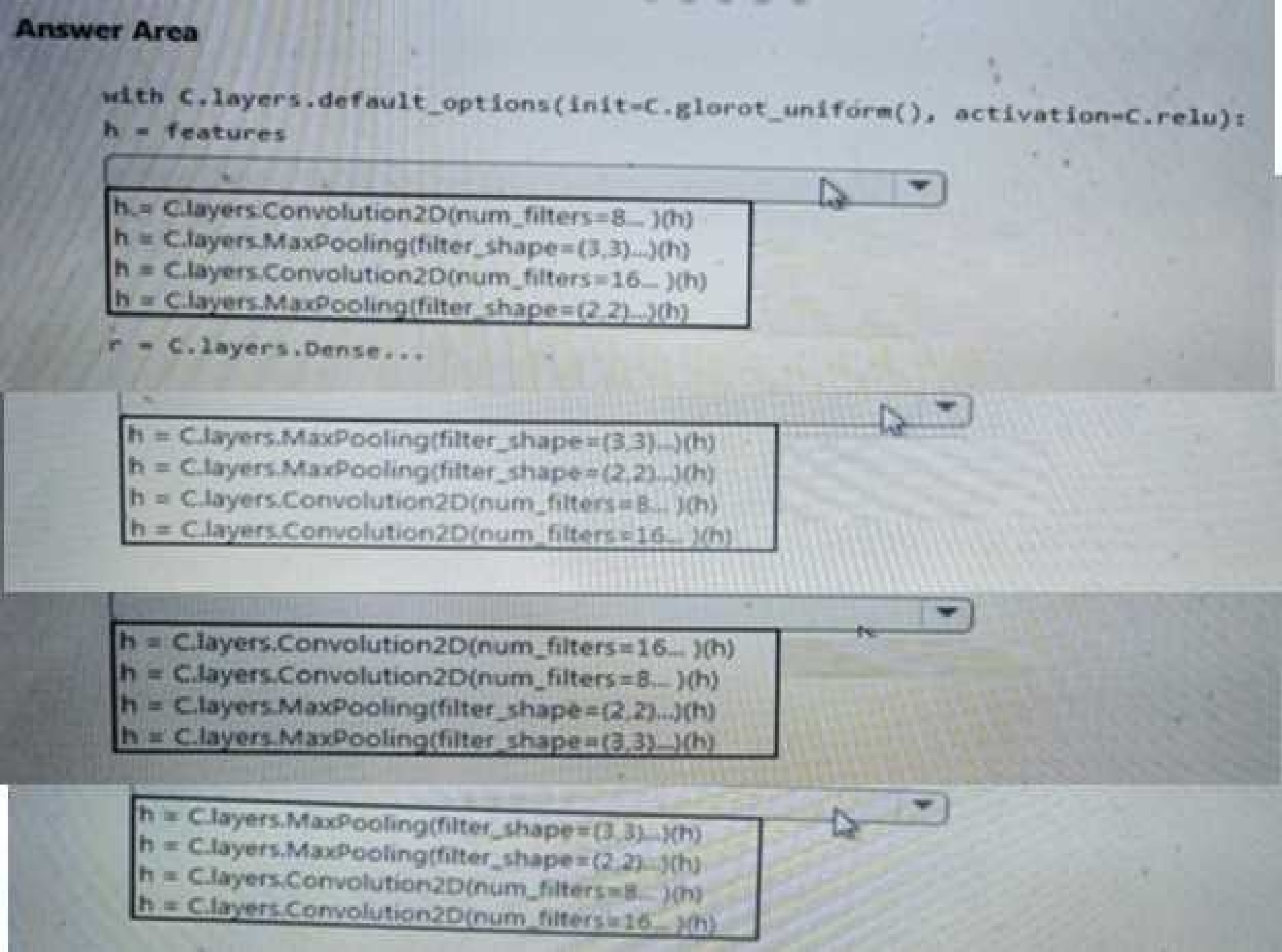

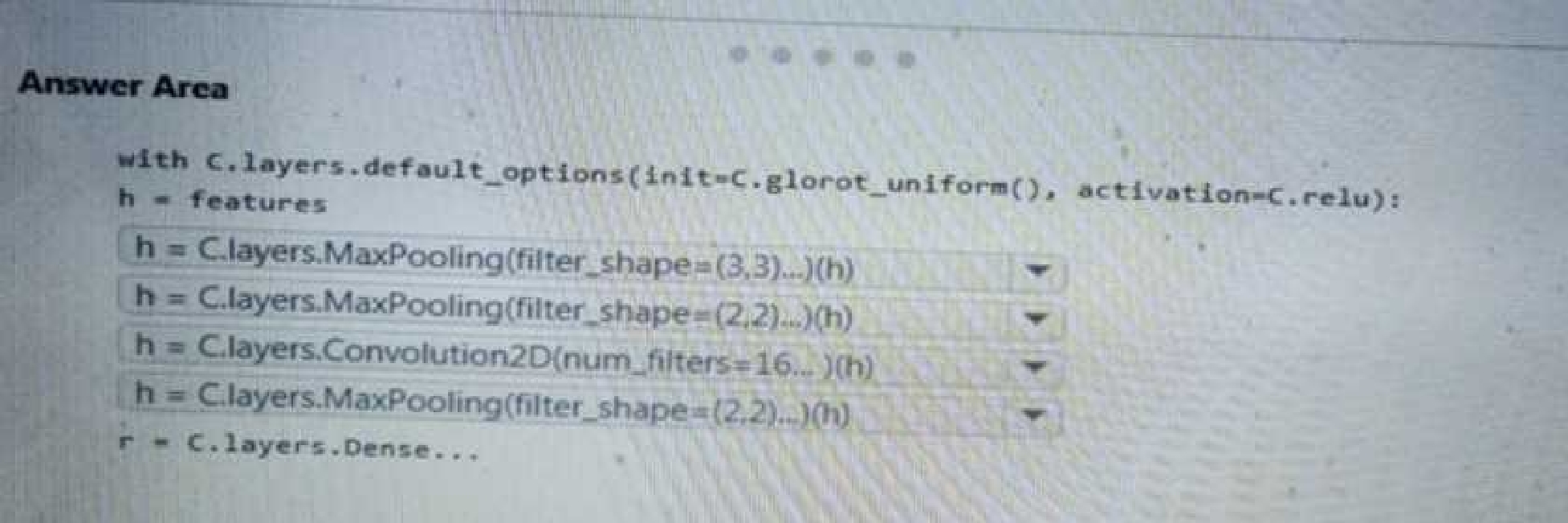

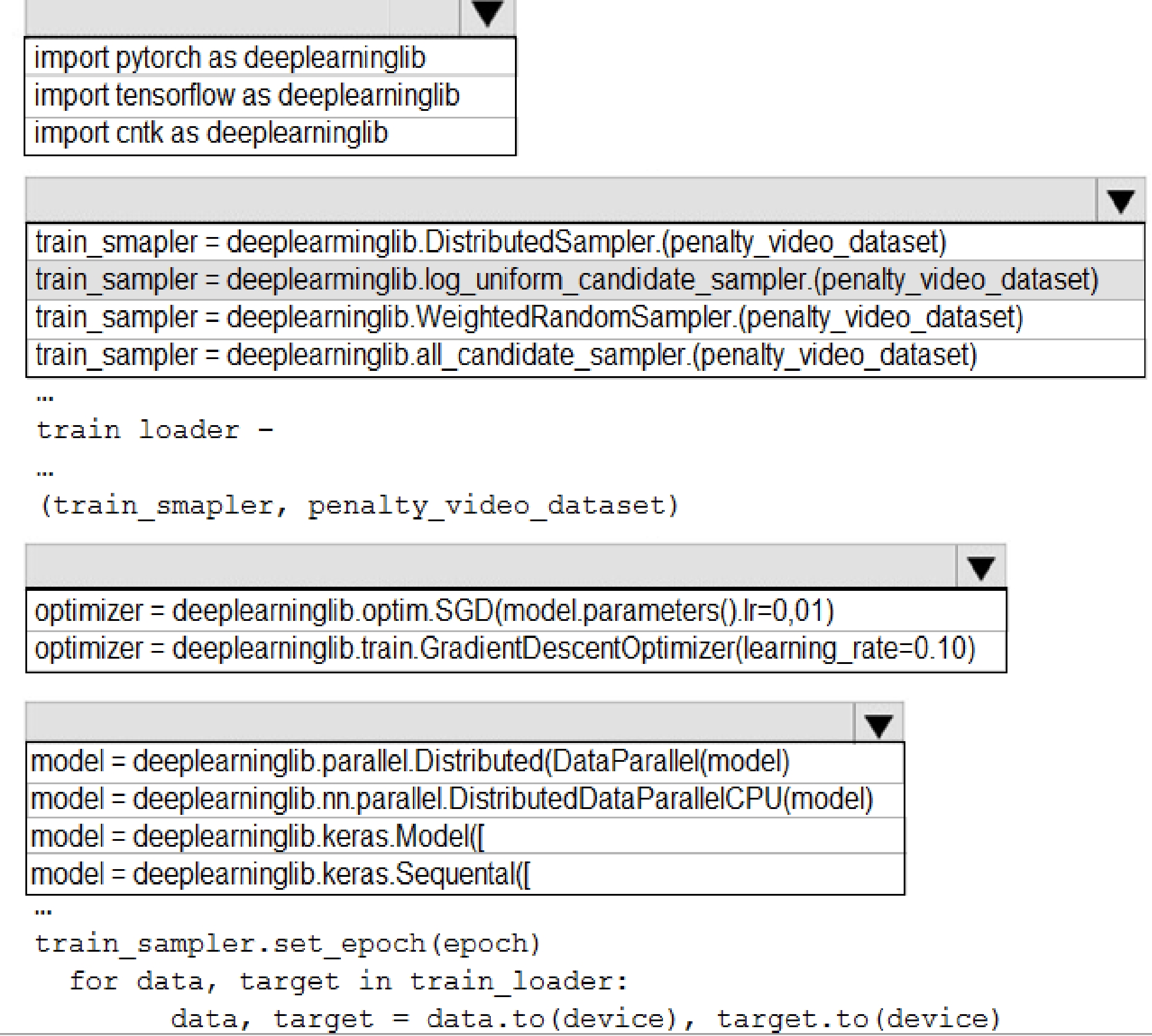

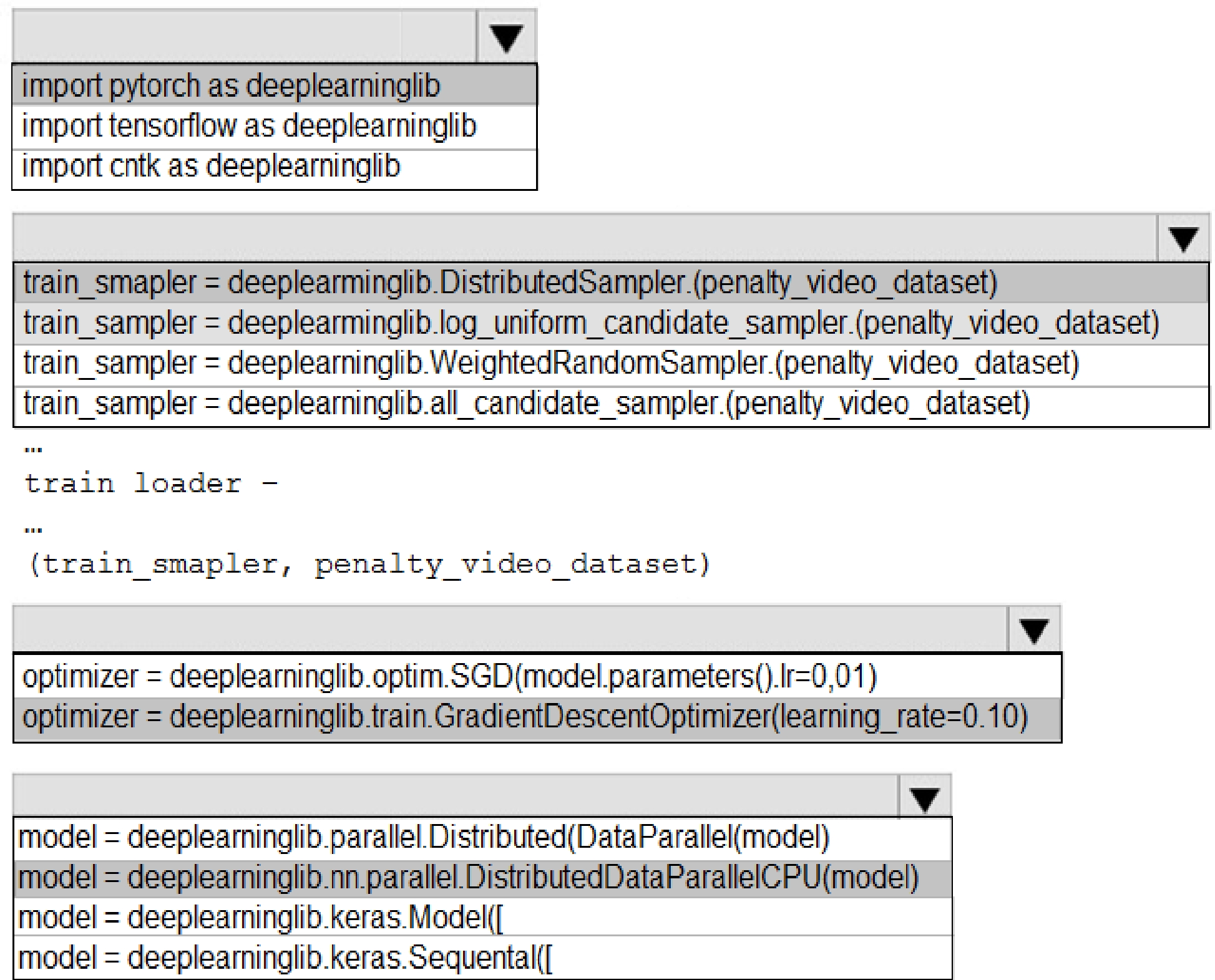

How should you complete the code segment? To answer, select the appropriate options in the

answer area.

NOTE: Each correct selection is worth one point.

Quiz


models.

How should you complete the code segment? To answer, select the appropriate options in the

answer area.

NOTE: Each correct selection is worth one point.

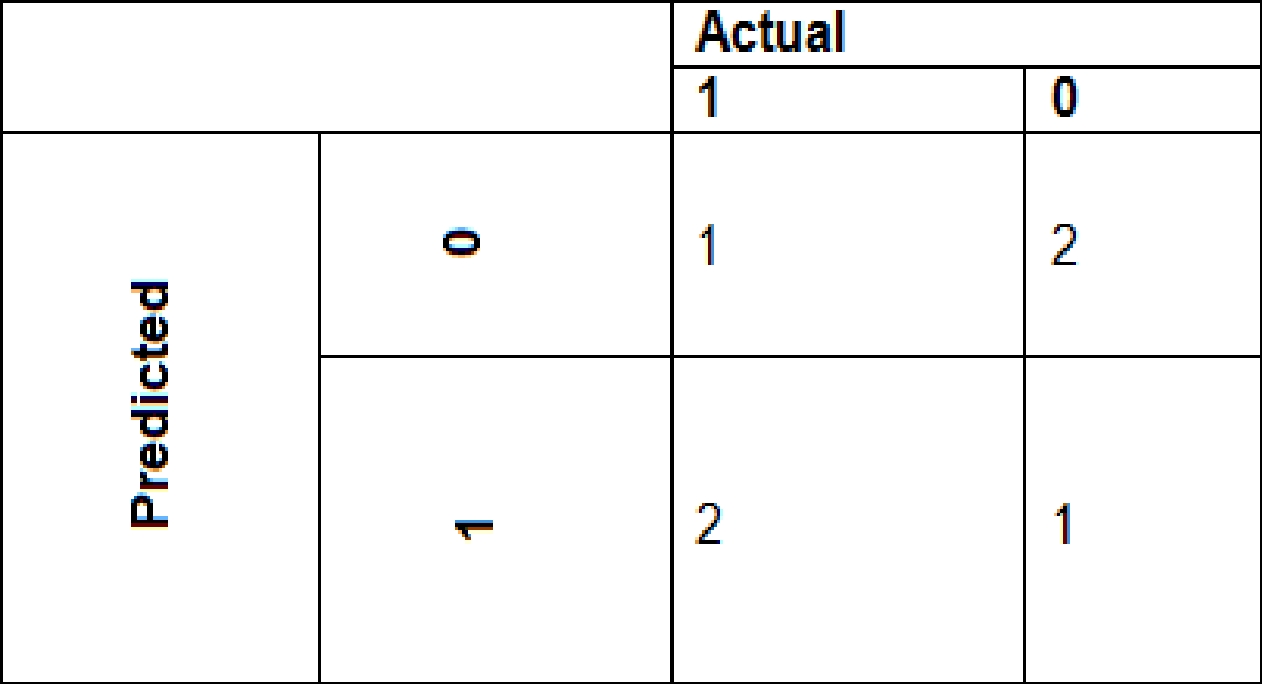

Box 1: import pytorch as deeplearninglib

Box 2: ..DistributedSampler(Sampler)..

DistributedSampler(Sampler): Sampler that restricts data loading to a subset of the dataset.

It is especially useful in conjunction with `torch.nn.parallel.DistributedDataParallel`. In such a case, each process can pass a DistributedSampler instance as a DataLoader sampler and load a subset of the original dataset that is exclusive to it.

Scenario: Sampling must guarantee mutual and collective exclusivity between local and global segmentation models that share the same features.
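The partitioning idea behind a distributed sampler can be sketched without PyTorch: each process (rank) takes every `num_replicas`-th index, so the shards are mutually exclusive and together cover the whole dataset. The function `shard_indices` is a hypothetical illustration. The real `DistributedSampler` additionally shuffles per epoch and, by default, pads shards to equal length, both of which are omitted here.

```python
def shard_indices(dataset_size, num_replicas, rank):
    """Indices seen by one process: every num_replicas-th item, starting at rank."""
    return list(range(rank, dataset_size, num_replicas))


# Three hypothetical worker processes sharing a ten-item dataset.
shards = [shard_indices(dataset_size=10, num_replicas=3, rank=r) for r in range(3)]
# shards == [[0, 3, 6, 9], [1, 4, 7], [2, 5, 8]]

covered = sorted(i for shard in shards for i in shard)
```

No index appears in two shards (mutual exclusivity), and `covered` contains every index from 0 to 9 (collective coverage).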

Box 3: optimizer = deeplearninglib.train.GradientDescentOptimizer(learning_rate=0.10)

Incorrect Answers: ..SGD..

Scenario: All penalty detection models show inference phases using a Stochastic Gradient Descent (SGD) are running too slow.

Box 4: .. nn.parallel.DistributedDataParallel..

DistributedSampler(Sampler): The sampler that restricts data loading to a subset of the dataset. It is especially useful in conjunction with `torch.nn.parallel.DistributedDataParallel`.

Reference:

[https://github.com/pytorch/pytorch/blob/master/torch/utils/data/distributed.py](https://github.com/pytorch/pytorch/blob/master/torch/utils/data/distributed.py)

Quiz


What should you do?


Info quiz:

- Quiz name: Designing and Implementing a Data Science Solution on Azure

- Total number of questions: 505

- Number of questions for the test: 50

- Pass score: 80%
